braindecode.models.AttnSleep

- class braindecode.models.AttnSleep(sfreq=None, n_tce=2, d_model=80, d_ff=120, n_attn_heads=5, drop_prob=0.1, activation_mrcnn=<class 'torch.nn.modules.activation.GELU'>, activation=<class 'torch.nn.modules.activation.ReLU'>, input_window_seconds=None, n_outputs=None, after_reduced_cnn_size=30, return_feats=False, chs_info=None, n_chans=None, n_times=None)

Sleep Staging Architecture from Eldele et al. (2021) [Eldele2021].

Model categories: Convolution, Attention/Transformer

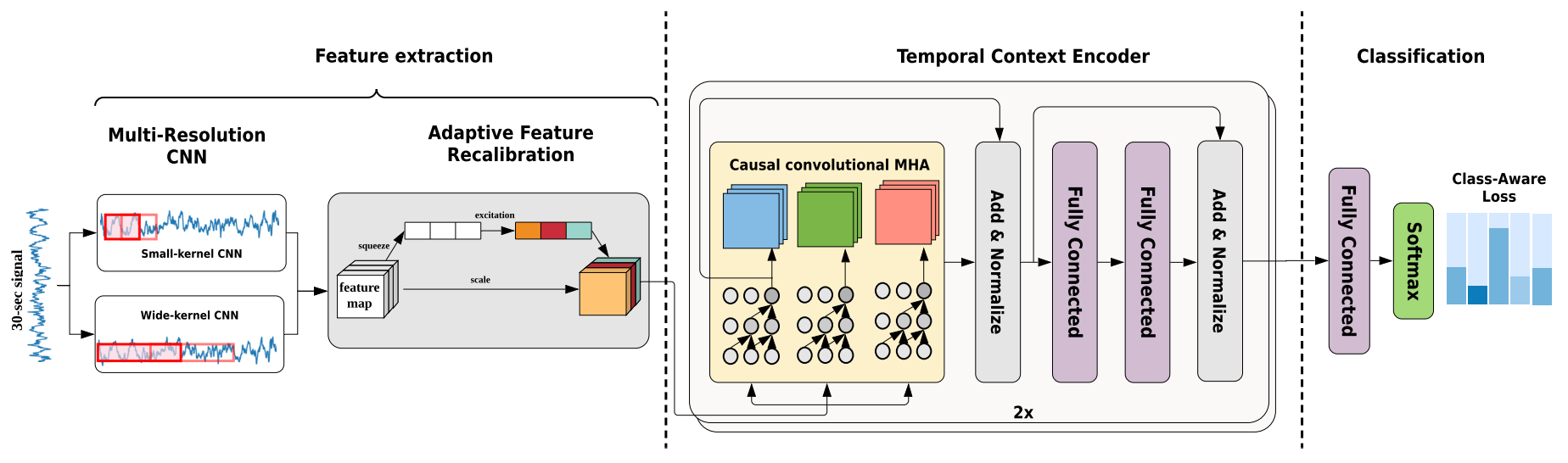

Attention-based neural network for sleep staging, as described in [Eldele2021]. The code for the paper and this model is also available at [1]. The model takes single-channel EEG as input. Its feature extraction module is based on a multi-resolution convolutional neural network (MRCNN) and adaptive feature recalibration (AFR). The second module, the temporal context encoder (TCE), leverages a multi-head attention mechanism to capture the temporal dependencies among the extracted features.
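The TCE's core operation is multi-head self-attention. The sketch below is a minimal, dependency-free illustration of generic multi-head scaled dot-product self-attention, not the braindecode implementation: it assumes identity query/key/value projections, whereas the actual TCE adds learned projections and further layers (such as the position-wise feed-forward block mentioned under drop_prob). The function names are hypothetical, and the toy shapes (30 positions, 80 features, 5 heads) are chosen only to echo the defaults d_model=80 and n_attn_heads=5.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_self_attention(x, n_heads):
    """Hypothetical sketch: self-attention over x of shape (seq_len, d_model).

    Assumption: queries, keys and values are the input itself (identity
    projections). Features are split into n_heads contiguous slices of size
    d_model // n_heads. Returns (output, weights), where weights[h][i] is the
    attention distribution of position i in head h.
    """
    seq_len, d_model = len(x), len(x[0])
    if d_model % n_heads != 0:
        raise ValueError("d_model must be divisible by n_heads")
    d_head = d_model // n_heads
    output = [[0.0] * d_model for _ in range(seq_len)]
    weights = []
    for h in range(n_heads):
        lo, hi = h * d_head, (h + 1) * d_head
        head = [row[lo:hi] for row in x]  # (seq_len, d_head) slice for this head
        head_weights = []
        for i in range(seq_len):
            # Scaled dot-product scores between position i and every position j.
            scores = [
                sum(q * k for q, k in zip(head[i], head[j])) / math.sqrt(d_head)
                for j in range(seq_len)
            ]
            w = softmax(scores)
            head_weights.append(w)
            # Weighted sum of value vectors, written into this head's slice.
            for c in range(d_head):
                output[i][lo + c] = sum(w[j] * head[j][c] for j in range(seq_len))
        weights.append(head_weights)
    return output, weights


# Toy input: 30 positions with 80 random features each (arbitrary values).
random.seed(0)
features = [[random.gauss(0.0, 1.0) for _ in range(80)] for _ in range(30)]
out, attn = multi_head_self_attention(features, n_heads=5)
print(len(out), len(out[0]), len(attn))  # 30 80 5
```

Each head attends over the whole sequence using only its d_model // n_heads slice of the features, which is why d_model has to be divisible by the number of heads.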

Warning: This model was designed for 30-second signals sampled at 100 Hz or 125 Hz. At 125 Hz, the reference architecture from [1], which was validated on the SHHS dataset [2], is used. Using any other input is likely to make the model behave in unintended ways.
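To check whether a planned configuration matches the one the warning describes, the number of samples per window is sfreq multiplied by the window length (for example, 3000 samples for 30 s at 100 Hz). The helpers below are hypothetical, not part of braindecode; they only encode the 30 s, 100 Hz and 125 Hz configurations stated in the warning.

```python
import math

# Configurations named in the warning: 30-second windows at 100 Hz or 125 Hz.
DESIGNED_WINDOW_SECONDS = 30.0
DESIGNED_SFREQS = (100.0, 125.0)


def expected_n_times(sfreq, input_window_seconds):
    """Hypothetical helper: number of samples in one window."""
    n = sfreq * input_window_seconds
    if not math.isclose(n, round(n)):
        raise ValueError("window does not contain a whole number of samples")
    return int(round(n))


def is_designed_config(sfreq, input_window_seconds):
    """Hypothetical helper: True if (sfreq, window) matches a designed configuration."""
    return math.isclose(input_window_seconds, DESIGNED_WINDOW_SECONDS) and any(
        math.isclose(sfreq, f) for f in DESIGNED_SFREQS
    )


print(expected_n_times(100, 30), is_designed_config(100, 30))  # 3000 True
print(expected_n_times(125, 30), is_designed_config(125, 30))  # 3750 True
print(expected_n_times(250, 30), is_designed_config(250, 30))  # 7500 False
```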

- Parameters:

n_tce (int) – Number of TCE clones.

d_model (int) – Input dimension of the TCE. It is also the input dimension of the first fully connected (FC) layer in the feed-forward block and the output dimension of the second FC layer in that block. Increase it for higher sampling rates or longer signals. It must be divisible by n_attn_heads.

d_ff (int) – Output dimension of the first FC layer in the feed-forward block and input dimension of the second FC layer in that block.

n_attn_heads (int) – Number of attention heads. It must be a divisor of d_model.

drop_prob (float) – Dropout rate in the PositionWiseFeedforward layer and the TCE layers.

after_reduced_cnn_size (int) – Number of output channels produced by the convolution in the AFR module.

return_feats (bool) – If True, return the features, i.e. the output of the feature extractor (before the final linear layer). If False, pass the features through the final linear layer.

n_classes (int) – Alias for n_outputs.

input_size_s (float) – Alias for input_window_seconds.

activation (nn.Module, default=nn.ReLU) – Activation function class to apply. Should be a PyTorch activation module class like nn.ReLU or nn.ELU. Default is nn.ReLU.

activation_mrcnn (nn.Module, default=nn.GELU) – Activation function class to apply in the MRCNN (multi-resolution CNN) module. Should be a PyTorch activation module class like nn.ReLU or nn.GELU. Default is nn.GELU.
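The constraints on d_model, d_ff and n_attn_heads can be checked before building the model. The hypothetical helper below (not a braindecode function) validates the divisibility requirement and reports the per-head dimension and the (in_features, out_features) of the two feed-forward FC layers as described in the parameter list; with the defaults, each of the 5 heads works on 80 / 5 = 16 features.

```python
def tce_layer_shapes(d_model=80, d_ff=120, n_attn_heads=5):
    """Hypothetical helper: illustrative TCE sizes (defaults mirror the signature)."""
    if d_model % n_attn_heads != 0:
        raise ValueError(
            f"d_model={d_model} must be divisible by n_attn_heads={n_attn_heads}"
        )
    return {
        "head_dim": d_model // n_attn_heads,  # features seen by each attention head
        "ff_fc1": (d_model, d_ff),  # first FC layer: d_model -> d_ff
        "ff_fc2": (d_ff, d_model),  # second FC layer: d_ff -> d_model
    }


print(tce_layer_shapes())
# {'head_dim': 16, 'ff_fc1': (80, 120), 'ff_fc2': (120, 80)}

try:
    tce_layer_shapes(d_model=80, n_attn_heads=6)  # 80 % 6 != 0
except ValueError as err:
    print(err)  # d_model=80 must be divisible by n_attn_heads=6
```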

References

Methods

- forward(x)

Forward pass.

- Parameters:

x (torch.Tensor) – Batch of EEG windows of shape (batch_size, n_channels, n_times).

- Return type:

Examples using braindecode.models.AttnSleep

Sleep staging on the Sleep Physionet dataset using Eldele2021