braindecode.models.FBCNet

- class braindecode.models.FBCNet(n_chans=None, n_outputs=None, chs_info=None, n_times=None, input_window_seconds=None, sfreq=None, n_bands=9, n_filters_spat=32, temporal_layer='LogVarLayer', n_dim=3, stride_factor=4, activation=<class 'torch.nn.modules.activation.SiLU'>, linear_max_norm=0.5, cnn_max_norm=2.0, filter_parameters=None)

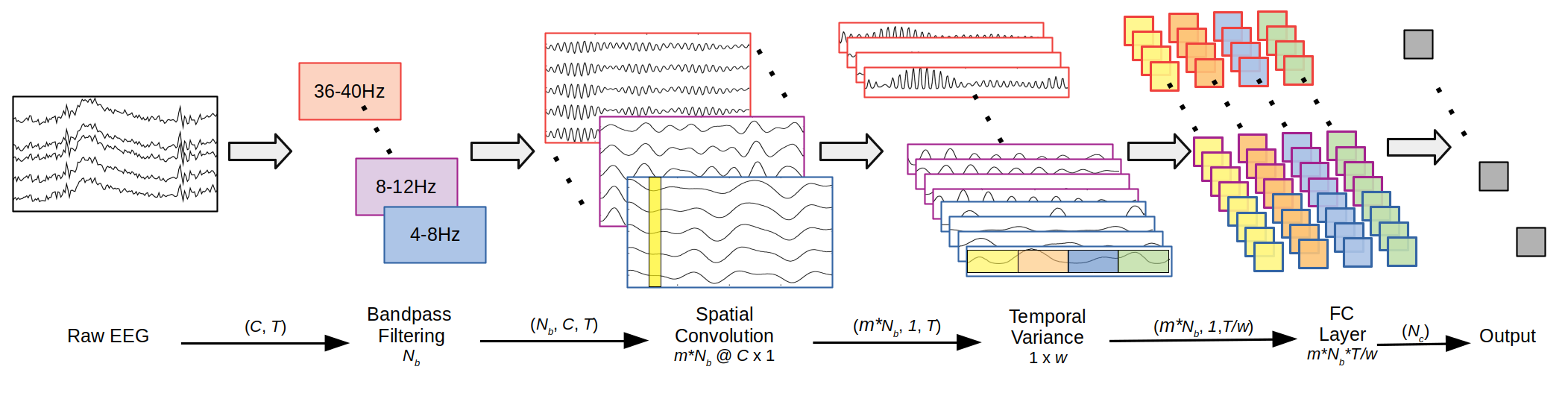

FBCNet from Mane, R. et al. (2021) [fbcnet2021].

Convolution Filterbank

The FBCNet model applies spatial convolution and variance calculation along the time axis, inspired by the Filter Bank Common Spatial Pattern (FBCSP) algorithm.
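The variance calculation along the time axis can be illustrated with a small NumPy sketch. This is not the library's code: the function name `log_var_pool`, the clamp value `eps`, and the use of the unbiased variance are assumptions made for illustration. It splits the time axis into `stride_factor` windows and takes the log-variance inside each one.

```python
import numpy as np


def log_var_pool(x, stride_factor=4, eps=1e-6):
    """Log-variance over `stride_factor` equal time windows (illustrative).

    x: array of shape (batch, n_features, n_times).
    Returns an array of shape (batch, n_features, stride_factor).
    """
    batch, n_features, n_times = x.shape
    if n_times % stride_factor != 0:
        raise ValueError("n_times must be divisible by stride_factor")
    # Split the time axis into `stride_factor` contiguous windows.
    windows = x.reshape(batch, n_features, stride_factor, n_times // stride_factor)
    # Unbiased variance inside each window, floored at eps before the log
    # (both choices are assumptions of this sketch).
    var = windows.var(axis=-1, ddof=1)
    return np.log(np.maximum(var, eps))


rng = np.random.default_rng(0)
# 2 trials, 9 bands x 32 spatial filters = 288 feature maps, 1000 samples
features = rng.standard_normal((2, 9 * 32, 1000))
pooled = log_var_pool(features, stride_factor=4)
print(pooled.shape)  # (2, 288, 4)
```

Each feature map is reduced to `stride_factor` values, so some coarse temporal structure survives the pooling instead of being collapsed to a single number per map.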

Notes

This implementation has not been checked by the original authors and is not guaranteed to be correct; it was reimplemented from the paper description and the source code [fbcnetcode2021]. The two sources differ in the activation function: the paper uses ELU, whereas the original code uses SiLU. This implementation follows the code.
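To make the difference between the two activations concrete, the sketch below evaluates the standard definitions of SiLU and ELU at a few points using only the standard library. The helper names `silu` and `elu` are illustrative, and `alpha=1.0` is assumed for ELU.

```python
import math


def silu(x):
    """SiLU (swish): x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def elu(x, alpha=1.0):
    """ELU: identity for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


# Compare the two functions on a negative, a zero, and a positive input.
for value in (-2.0, 0.0, 2.0):
    print(f"x={value:+.1f}  SiLU={silu(value):+.4f}  ELU={elu(value):+.4f}")
```

The two functions differ mainly for negative inputs: ELU saturates towards `-alpha`, while SiLU dips slightly below zero and returns to zero as the input becomes more negative.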

- Parameters:

n_bands (int or None or list[tuple[int, int]], default=9) – Number of frequency bands. The type annotation also allows None or a list of (int, int) tuples.

n_filters_spat (int, default=32) – Number of spatial filters for the first convolution.

n_dim (int, default=3) – Number of dimensions for the temporal reduction layer.

temporal_layer (str, default='LogVarLayer') – Type of temporal aggregator layer. Options: 'VarLayer', 'StdLayer', 'LogVarLayer', 'MeanLayer', 'MaxLayer'.

stride_factor (int, default=4) – Stride factor for reshaping.

activation (nn.Module, default=nn.SiLU) – Activation function class to apply in the spatial convolution block.

cnn_max_norm (float, default=2.0) – Maximum norm for the spatial convolution layer.

linear_max_norm (float, default=0.5) – Maximum norm for the final linear layer.

filter_parameters (dict, default=None) – Dictionary of parameters to use for the FilterBankLayer. If None, a default Chebyshev Type II filter with a transition bandwidth of 2 Hz and a stop-band ripple of 30 dB is used.
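The `cnn_max_norm` and `linear_max_norm` parameters bound the size of the layer weights. As a rough illustration of what a max-norm constraint does, the NumPy sketch below rescales any per-unit weight vector whose L2 norm exceeds the limit. The helper `apply_max_norm`, the choice of the L2 norm per output unit, and the weight shape are assumptions of this sketch, not a description of how the library enforces the constraint.

```python
import numpy as np


def apply_max_norm(weight, max_norm, eps=1e-8):
    """Rescale per-unit weight vectors whose L2 norm exceeds `max_norm`.

    weight: array of shape (n_out, ...); each slice along axis 0 is one unit.
    """
    flat = weight.reshape(weight.shape[0], -1)
    norms = np.linalg.norm(flat, axis=1, keepdims=True)
    # Scale factor is 1 for units already within the limit, < 1 otherwise.
    scale = np.minimum(1.0, max_norm / (norms + eps))
    return (flat * scale).reshape(weight.shape)


rng = np.random.default_rng(0)
# Hypothetical final-layer weight of shape (n_outputs, n_features).
linear_weight = rng.standard_normal((4, 1152))
constrained = apply_max_norm(linear_weight, max_norm=0.5)
print(np.linalg.norm(constrained, axis=1))
```

Units whose norm is already below the limit are left unchanged, so the constraint acts only on weights that have grown too large.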

References

[fbcnet2021] Mane, R., Chew, E., Chua, K., Ang, K. K., Robinson, N., Vinod, A. P., … & Guan, C. (2021). FBCNet: A multi-view convolutional neural network for brain-computer interface. arXiv preprint arXiv:2104.01233.

[fbcnetcode2021] Link to source code: ravikiran-mane/FBCNet

Methods

- forward(x)

Forward pass of the FBCNet model.

- Parameters:

x (torch.Tensor) – Input tensor with shape (batch_size, n_chans, n_times).

- Returns:

Output tensor with shape (batch_size, n_outputs).

- Return type: