braindecode.models.EEGSimpleConv

- class braindecode.models.EEGSimpleConv(n_outputs=None, n_chans=None, sfreq=None, feature_maps=128, n_convs=2, resampling_freq=80, kernel_size=8, return_feature=False, activation=<class 'torch.nn.modules.activation.ReLU'>, chs_info=None, n_times=None, input_window_seconds=None)

EEGSimpleConv from El Ouahidi, Y. et al. (2023) [Yassine2023].

Convolution

EEGSimpleConv is a 1D convolutional neural network originally designed for decoding motor imagery (MI) from EEG signals. The model aims for a very simple and straightforward architecture that allows for low latency while still achieving very competitive performance.

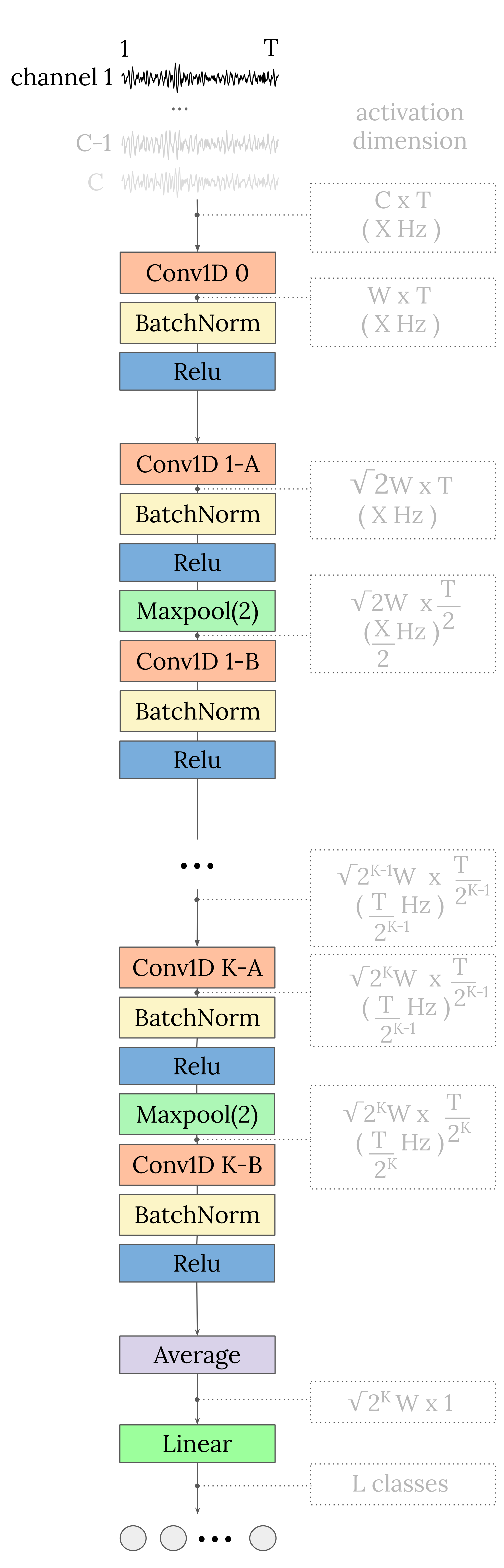

EEG-SimpleConv starts with a 1D convolutional layer, where each EEG channel enters a separate 1D convolutional channel. This is followed by a series of blocks of two 1D convolutional layers. Between the two convolutional layers of each block is a max pooling layer, which downsamples the data by a factor of 2. Each convolution is followed by a batch normalisation layer and a ReLU activation function. Finally, a global average pooling (in the time domain) is performed to obtain a single value per feature map, which is then fed into a linear layer to obtain the final classification prediction output.
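The layer ordering described above can be sketched in plain PyTorch. This is an illustrative reconstruction only, not the braindecode implementation: the class name `SimpleConvSketch` is hypothetical, the initial resampling step is omitted, and the padding (`kernel_size // 2`) and the constant number of feature maps across blocks are assumptions made to keep the sketch short.

```python
import torch
from torch import nn


class SimpleConvSketch(nn.Module):
    """Hypothetical sketch of the layout described above (not braindecode's code)."""

    def __init__(self, n_chans, n_outputs, feature_maps=128, n_convs=2,
                 kernel_size=8, activation=nn.ReLU):
        super().__init__()
        pad = kernel_size // 2  # assumption: roughly length-preserving padding

        def conv_bn(in_ch, out_ch):
            # Every convolution is followed by batch normalisation.
            return [
                nn.Conv1d(in_ch, out_ch, kernel_size, padding=pad, bias=False),
                nn.BatchNorm1d(out_ch),
            ]

        # Initial convolution: each EEG channel is one input channel.
        layers = conv_bn(n_chans, feature_maps) + [activation()]
        for _ in range(n_convs):
            # Block: conv -> BN -> max pool (x2 downsampling) -> act -> conv -> BN -> act.
            # Assumption: the width stays at `feature_maps` in every block.
            layers += conv_bn(feature_maps, feature_maps)
            layers += [nn.MaxPool1d(2), activation()]
            layers += conv_bn(feature_maps, feature_maps) + [activation()]
        self.features = nn.Sequential(*layers)
        self.classifier = nn.Linear(feature_maps, n_outputs)

    def forward(self, x):
        # x has shape (batch_size, n_channels, n_timesteps).
        x = self.features(x)
        x = x.mean(dim=-1)  # global average pooling over time
        return self.classifier(x)


model = SimpleConvSketch(n_chans=22, n_outputs=4)
model.eval()
with torch.no_grad():
    logits = model(torch.randn(2, 22, 320))
print(logits.shape)
```

With a batch of 2 windows and `n_outputs=4`, the printed shape should be `(2, 4)`: one score per class for each window. Note that `activation` is passed as a class and instantiated inside the model, so `activation=nn.ELU` works the same way.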

The paper and the original code, which give more detail on the methodological choices, are available at [Yassine2023] and [Yassine2023Code], respectively.

The input should be a three-dimensional tensor representing the EEG signals, with shape

(batch_size, n_channels, n_timesteps).

Notes

The authors recommend using the default parameters for MI decoding. Please refer to the original paper and code for more details.

Recommended hyperparameter ranges for each evaluation paradigm:

| Parameter | Within-Subject | Cross-Subject |
|---|---|---|
| feature_maps | [64-144] | [64-144] |
| n_convs | 1 | [2-4] |
| resampling_freq | [70-100] | [50-80] |
| kernel_size | [12-17] | [5-8] |

An intensive ablation study is included in the paper to understand the effect of each parameter on the model performance.
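As a small illustration, the recommended ranges in the table above can be encoded as a lookup and used to flag settings that fall outside them. The names `RECOMMENDED_RANGES` and `out_of_range` are hypothetical helpers, not part of braindecode, and treating the ranges as inclusive on both ends is an assumption.

```python
# Ranges copied from the table above as (low, high); assumed inclusive.
RECOMMENDED_RANGES = {
    "within_subject": {
        "feature_maps": (64, 144),
        "n_convs": (1, 1),
        "resampling_freq": (70, 100),
        "kernel_size": (12, 17),
    },
    "cross_subject": {
        "feature_maps": (64, 144),
        "n_convs": (2, 4),
        "resampling_freq": (50, 80),
        "kernel_size": (5, 8),
    },
}


def out_of_range(paradigm, **hyperparams):
    """Return {name: (value, (low, high))} for values outside the table's ranges."""
    ranges = RECOMMENDED_RANGES[paradigm]
    flagged = {}
    for name, value in hyperparams.items():
        low, high = ranges[name]
        if not low <= value <= high:
            flagged[name] = (value, (low, high))
    return flagged


# Constructor defaults taken from the class signature.
defaults = dict(feature_maps=128, n_convs=2, resampling_freq=80, kernel_size=8)
print(out_of_range("cross_subject", **defaults))   # {}
print(out_of_range("within_subject", **defaults))
```

The constructor defaults sit inside every cross-subject range, while for the within-subject paradigm `n_convs` and `kernel_size` are flagged, which suggests the defaults are tuned for the cross-subject setting.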

Added in version 0.9.

- Parameters:

feature_maps (int) – Number of feature maps in the first convolution; sets the width of the model.

n_convs (int) – Number of convolution blocks (2 convolutions per block); sets the depth of the model.

resampling_freq (int) – Resampling frequency.

kernel_size (int) – Size of the convolution kernels.

activation (nn.Module, default=nn.ReLU) – Activation function class to apply. Should be a PyTorch activation module class such as nn.ReLU or nn.ELU. Default is nn.ReLU, as shown in the class signature.

References

[Yassine2023] Yassine El Ouahidi, V. Gripon, B. Pasdeloup, G. Bouallegue, N. Farrugia, G. Lioi, 2023. A Strong and Simple Deep Learning Baseline for BCI Motor Imagery Decoding. arXiv preprint. arxiv.org/abs/2309.07159

[Yassine2023Code] Yassine El Ouahidi, V. Gripon, B. Pasdeloup, G. Bouallegue, N. Farrugia, G. Lioi, 2023. A Strong and Simple Deep Learning Baseline for BCI Motor Imagery Decoding. GitHub repository. elouayas/EEGSimpleConv.

Methods