braindecode.models.FBMSNet

- class braindecode.models.FBMSNet(n_chans=None, n_outputs=None, chs_info=None, n_times=None, input_window_seconds=None, sfreq=None, n_bands=9, n_filters_spat=36, temporal_layer='LogVarLayer', n_dim=3, stride_factor=4, dilatability=8, activation=<class 'torch.nn.modules.activation.SiLU'>, kernels_weights=(15, 31, 63, 125), cnn_max_norm=2, linear_max_norm=0.5, verbose=False, filter_parameters=None)

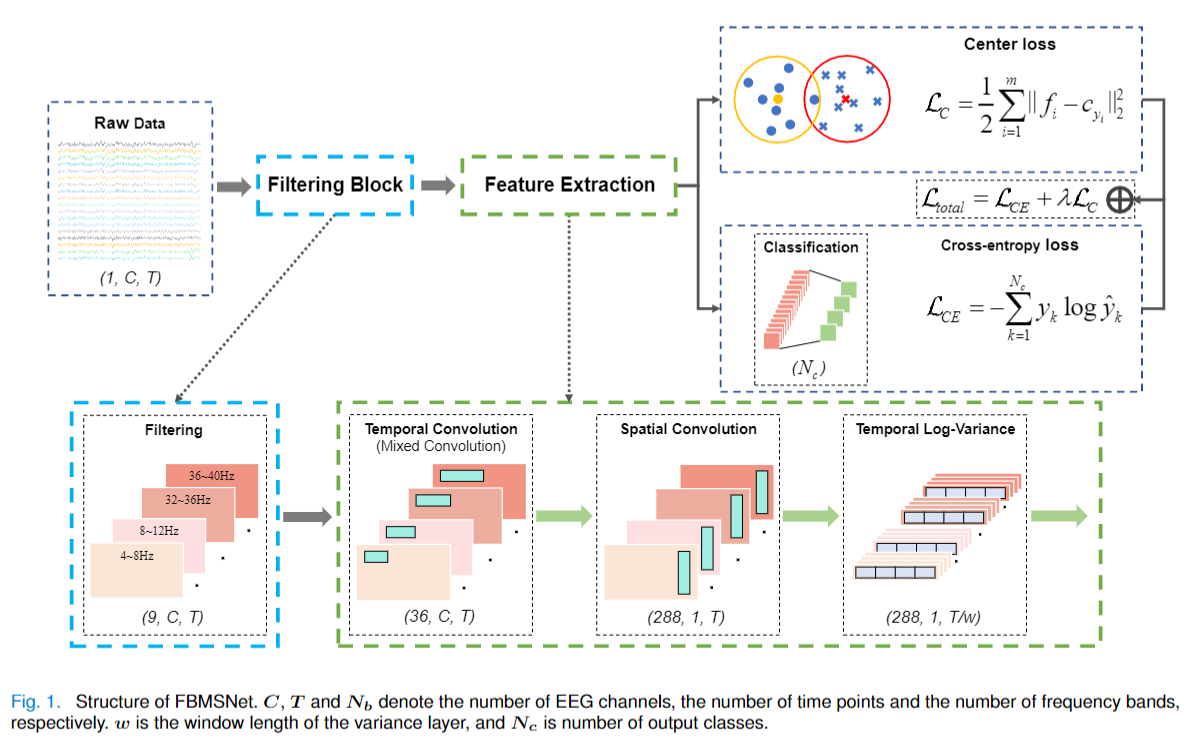

FBMSNet from Liu et al. (2022) [fbmsnet].

Categories: Convolution, Filterbank

FilterBank Layer: Applies a filter bank that splits the input into n_bands frequency-band views of the EEG.
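As a rough illustration of the filter-bank step, the sketch below band-passes a single trial into several frequency-band views with SciPy. It assumes nine 4 Hz-wide bands between 4 and 40 Hz and approximates the default described under filter_parameters (Chebyshev Type II, 2 Hz transition band, 30 dB stop-band attenuation) with `scipy.signal.iirdesign`; the 3 dB passband loss, the zero-phase `sosfiltfilt` filtering and the helper name `filter_bank` are assumptions, and this is not braindecode's own filter-bank layer.

```python
import numpy as np
from scipy import signal

# Assumed band layout: nine non-overlapping 4 Hz bands from 4 to 40 Hz.
BANDS = [(low, low + 4) for low in range(4, 40, 4)]


def filter_bank(x, sfreq, bands=BANDS, transition=2.0, gpass=3.0, gstop=30.0):
    """Band-pass x of shape (n_chans, n_times) into each band.

    Returns an array of shape (n_bands, n_chans, n_times).
    """
    views = []
    for low, high in bands:
        # Chebyshev Type II band-pass: passband [low, high], stop-band edges
        # `transition` Hz outside it with at least `gstop` dB attenuation.
        sos = signal.iirdesign(
            wp=[low, high],
            ws=[low - transition, high + transition],
            gpass=gpass,
            gstop=gstop,
            ftype="cheby2",
            output="sos",
            fs=sfreq,
        )
        # Zero-phase (forward-backward) filtering along the time axis.
        views.append(signal.sosfiltfilt(sos, x, axis=-1))
    return np.stack(views, axis=0)


# Usage: a 10 Hz sine should end up mostly in the 8-12 Hz view.
sfreq = 250.0
t = np.arange(1000) / sfreq
x = np.sin(2 * np.pi * 10.0 * t)[np.newaxis, :]  # (1 channel, 1000 samples)
views = filter_bank(x, sfreq)
power = views.var(axis=-1)[:, 0]  # signal power per band view
print(views.shape)  # (9, 1, 1000)
print(BANDS[int(power.argmax())])  # (8, 12)
```

Stacking the views on a new leading axis is what lets the next block treat each frequency band as an input channel.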

Temporal Convolution Block: Uses mixed depthwise convolution (MixConv) to extract multiscale temporal features from the multiview EEG representation produced by the filter bank. The input is split into groups corresponding to different views, and each group is convolved with a kernel of a different size. Kernel sizes are set relative to the EEG sampling rate with ratio coefficients [0.5, 0.25, 0.125, 0.0625], which divides the input into four groups; in this implementation the sizes themselves are given by kernels_weights.
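To make the grouping concrete, the sketch below evaluates the ratio coefficients at an assumed 250 Hz sampling rate and then mimics a mixed-kernel temporal convolution in NumPy. The channel-splitting rule (equal groups, remainder to the first group) follows the common timm-style MixConv convention and is an assumption here, as are the random weights, the "same" zero padding and the hypothetical helper names; for brevity each group uses a plain convolution over its own input channels rather than a depthwise one. It illustrates the idea only and is not the MixedConv2d layer itself.

```python
import numpy as np

# Kernel lengths implied by the ratio coefficients at an assumed 250 Hz rate.
SFREQ = 250.0
RATIOS = (0.5, 0.25, 0.125, 0.0625)
ideal_lengths = [r * SFREQ for r in RATIOS]  # [125.0, 62.5, 31.25, 15.625]
# The default kernels_weights=(15, 31, 63, 125) are odd integers close to these.


def split_channels(n_channels, n_groups):
    """Equal split; leftover channels go to the first group (assumed rule)."""
    sizes = [n_channels // n_groups] * n_groups
    sizes[0] += n_channels - sum(sizes)
    return sizes


def mixed_temporal_conv(x, kernel_sizes, out_channels, seed=0):
    """x: (in_channels, n_chans, n_times) -> (out_channels, n_chans, n_times)."""
    rng = np.random.default_rng(seed)
    in_sizes = split_channels(x.shape[0], len(kernel_sizes))
    out_sizes = split_channels(out_channels, len(kernel_sizes))
    outputs, start = [], 0
    for k, c_in, c_out in zip(kernel_sizes, in_sizes, out_sizes):
        group = x[start:start + c_in]  # this group's input channels (views)
        start += c_in
        # Random weights stand in for learned ones: (c_out, c_in, k).
        w = rng.standard_normal((c_out, c_in, k)) / np.sqrt(c_in * k)
        # "Same" zero padding along time; an odd k keeps the length unchanged.
        padded = np.pad(group, ((0, 0), (0, 0), (k // 2, k // 2)))
        for o in range(c_out):
            out = np.zeros(x.shape[1:])  # (n_chans, n_times)
            for i in range(c_in):
                for ch in range(x.shape[1]):
                    # Cross-correlation along time, as in deep-learning convs.
                    out[ch] += np.correlate(padded[i, ch], w[o, i], mode="valid")
            outputs.append(out)
    # Concatenate the groups' outputs along the feature axis.
    return np.stack(outputs, axis=0)


x = np.random.default_rng(1).standard_normal((9, 3, 200))  # 9 band views
y = mixed_temporal_conv(x, kernel_sizes=(15, 31, 63, 125), out_channels=36)
print(split_channels(9, 4), split_channels(36, 4))  # [3, 2, 2, 2] [9, 9, 9, 9]
print(y.shape)  # (36, 3, 200)
```

Each group only sees its own slice of views, so short kernels capture fast dynamics and long kernels slower ones, while the output keeps the input's time length.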

Spatial Convolution Block: Applies a depthwise convolution with kernel size (n_chans, 1) that spans all EEG channels, effectively learning spatial filters. It is followed by batch normalization and the Swish activation (SiLU, the default activation). A maximum norm constraint (cnn_max_norm, default 2) is imposed on the convolution weights to regularize the model.
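The sketch below illustrates the spatial step in NumPy under stated assumptions: dilatability is taken to act as the depthwise channel multiplier, so each of the 36 temporal feature maps gets several spatial filters that each span all channels; filter weights are rescaled to an L2 norm of at most cnn_max_norm, in the manner of `torch.renorm(w, p=2, dim=0, maxnorm=...)`; and Swish is x * sigmoid(x). Batch normalization is omitted, the weights are random and the helper names are hypothetical, so this is an illustration rather than the braindecode layer.

```python
import numpy as np


def max_norm(w, max_value):
    """Rescale each output filter (axis 0) to an L2 norm of at most max_value."""
    norms = np.linalg.norm(w.reshape(w.shape[0], -1), axis=1)
    scale = np.minimum(1.0, max_value / np.maximum(norms, 1e-12))
    return w * scale.reshape((-1,) + (1,) * (w.ndim - 1))


def depthwise_spatial_conv(x, w):
    """x: (n_maps, n_chans, n_times), w: (n_maps * depth, n_chans).

    Output filter j combines all channels of input map j // depth, so the
    (n_chans, 1) kernel collapses the channel axis.
    """
    depth = w.shape[0] // x.shape[0]
    source = np.repeat(np.arange(x.shape[0]), depth)  # input map per filter
    return np.einsum("fc,fct->ft", w, x[source])


def swish(x):
    """Swish / SiLU: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))


rng = np.random.default_rng(0)
n_maps, n_chans, n_times, depth = 36, 22, 100, 8  # depth plays dilatability
x = rng.standard_normal((n_maps, n_chans, n_times))
w = max_norm(10.0 * rng.standard_normal((n_maps * depth, n_chans)), 2.0)
z = swish(depthwise_spatial_conv(x, w))
print(z.shape)  # (288, 100)
```

Because the kernel height equals n_chans, every output is a weighted sum over all electrodes, which is what makes these learned spatial filters.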

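Temporal Log-Variance Block: Computes the log-variance of each spatially filtered feature map over temporal segments (see stride_factor), using the layer selected by temporal_layer.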
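A NumPy sketch of the log-variance step, assuming the time axis of each feature map is cut into stride_factor equal, non-overlapping segments and the log of the unbiased variance is taken per segment. Trailing samples that do not fill a segment are dropped here, and the 1e-6 floor before the log is an assumed safeguard; the actual layer may pad or clamp differently, and `log_var_segments` is a hypothetical helper.

```python
import numpy as np


def log_var_segments(x, stride_factor=4, floor=1e-6):
    """x: (n_features, n_times) -> (n_features, stride_factor) log-variances."""
    n_features, n_times = x.shape
    seg_len = n_times // stride_factor
    # Drop leftover samples, then cut time into equal non-overlapping segments.
    segments = x[:, : seg_len * stride_factor].reshape(
        n_features, stride_factor, seg_len
    )
    # Unbiased variance per segment (ddof=1, like torch.Tensor.var's default).
    var = segments.var(axis=-1, ddof=1)
    # Floor the variance so that log(0) cannot occur.
    return np.log(np.maximum(var, floor))


rng = np.random.default_rng(0)
z = rng.standard_normal((288, 1000))  # 36 * 8 spatial feature maps
features = log_var_segments(z, stride_factor=4)
print(features.shape)  # (288, 4)
print(features.reshape(-1).size)  # 1152
```

The log-variance of a band-passed, spatially filtered signal is a log band-power estimate, so each feature summarizes the power of one filter in one time window.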

Classification Layer: A fully connected layer with a maximum norm weight constraint (linear_max_norm, default 0.5).
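Putting the blocks together, the sketch below tracks tensor shapes through the pipeline and applies a max-norm constrained linear layer. It assumes the temporal convolution preserves the time length, the spatial convolution collapses the channel axis, dilatability multiplies the number of spatial filters, and the temporal layer yields one value per segment, so the classifier would see n_filters_spat * dilatability * stride_factor features (1152 with the defaults). The helper names and the 4-class output are hypothetical.

```python
import numpy as np


def stage_shapes(batch, n_chans, n_times, n_bands=9, n_filters_spat=36,
                 dilatability=8, stride_factor=4):
    """Per-stage tensor shapes under the assumptions of this sketch."""
    n_spatial = n_filters_spat * dilatability
    return {
        "input": (batch, n_chans, n_times),
        "filter_bank": (batch, n_bands, n_chans, n_times),
        "temporal_conv": (batch, n_filters_spat, n_chans, n_times),
        "spatial_conv": (batch, n_spatial, 1, n_times),
        "log_var": (batch, n_spatial, stride_factor),
        "flatten": (batch, n_spatial * stride_factor),
    }


def constrained_linear(features, weight, bias, max_norm=0.5):
    """Linear layer whose weight rows are rescaled to L2 norm <= max_norm."""
    norms = np.linalg.norm(weight, axis=1, keepdims=True)
    weight = weight * np.minimum(1.0, max_norm / np.maximum(norms, 1e-12))
    return features @ weight.T + bias


shapes = stage_shapes(batch=2, n_chans=22, n_times=1000)
n_features = shapes["flatten"][1]
print(n_features)  # 1152

rng = np.random.default_rng(0)
logits = constrained_linear(
    rng.standard_normal((2, n_features)),
    rng.standard_normal((4, n_features)),  # hypothetical n_outputs = 4
    np.zeros(4),
)
print(logits.shape)  # (2, 4)
```

Under these assumptions the classifier's input size does not depend on n_times, only on the filter counts and the number of temporal segments.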

Notes

This implementation is not guaranteed to be correct and has not been checked by the original authors; it was reimplemented from the paper description and the source code [fbmsnetcode]. An extra layer applies the filter bank at batch time, inside the model, rather than to the data beforehand. This avoids data leakage and allows the model to follow the braindecode convention.

- Parameters:

n_bands (int, default=9) – Number of frequency bands in the filter bank, i.e. the number of input channels of the MixedConv2d layer.

n_filters_spat (int, default=36) – Number of output channels from the MixedConv2d layer.

temporal_layer (str, default='LogVarLayer') – Temporal aggregation layer to use.

n_dim (int, default=3) – Dimension (tensor axis) along which the temporal reduction layer aggregates.

stride_factor (int, default=4) – Stride factor for temporal segmentation, i.e. the number of temporal segments over which the temporal layer is computed.

dilatability (int, default=8) – Expansion factor for the spatial convolution block.

activation (nn.Module, default=nn.SiLU) – Activation function class to apply.

kernels_weights (Sequence[int], default=(15, 31, 63, 125)) – Kernel sizes for the MixedConv2d layer.

cnn_max_norm (float, default=2) – Maximum norm constraint for the convolutional layers.

linear_max_norm (float, default=0.5) – Maximum norm constraint for the linear layers.

filter_parameters (dict, default=None) – Dictionary of parameters to use for the FilterBankLayer. If None, a default Chebyshev Type II filter with a transition bandwidth of 2 Hz and a stop-band attenuation of 30 dB is used.

verbose (bool, default=False) – Whether MNE prints verbose output when creating the filters.

References

[fbmsnet] Liu, K., Yang, M., Yu, Z., Wang, G., & Wu, W. (2022). FBMSNet: A filter-bank multi-scale convolutional neural network for EEG-based motor imagery decoding. IEEE Transactions on Biomedical Engineering, 70(2), 436-445.

[fbmsnetcode] Liu, K., Yang, M., Yu, Z., Wang, G., & Wu, W. (2022). FBMSNet: A filter-bank multi-scale convolutional neural network for EEG-based motor imagery decoding. Source code, GitHub repository Want2Vanish/FBMSNet.

Methods

- forward(x)

Forward pass of the FBMSNet model.

- Parameters:

x (torch.Tensor) – Input tensor with shape (batch_size, n_chans, n_times).

- Returns:

Output tensor with shape (batch_size, n_outputs).

- Return type: