braindecode.models.CTNet

- class braindecode.models.CTNet(n_outputs=None, n_chans=None, sfreq=None, chs_info=None, n_times=None, input_window_seconds=None, activation_patch=torch.nn.ELU, activation_transformer=torch.nn.GELU, cnn_drop_prob=0.3, att_positional_drop_prob=0.1, final_drop_prob=0.5, num_heads=4, embed_dim=40, num_layers=6, n_filters_time=None, kernel_size=64, depth_multiplier=2, pool_size_1=8, pool_size_2=8)

CTNet from Zhao, W. et al. (2024) [ctnet].

Categories: Convolution, Attention/Transformer

A Convolutional Transformer Network for EEG-Based Motor Imagery Classification

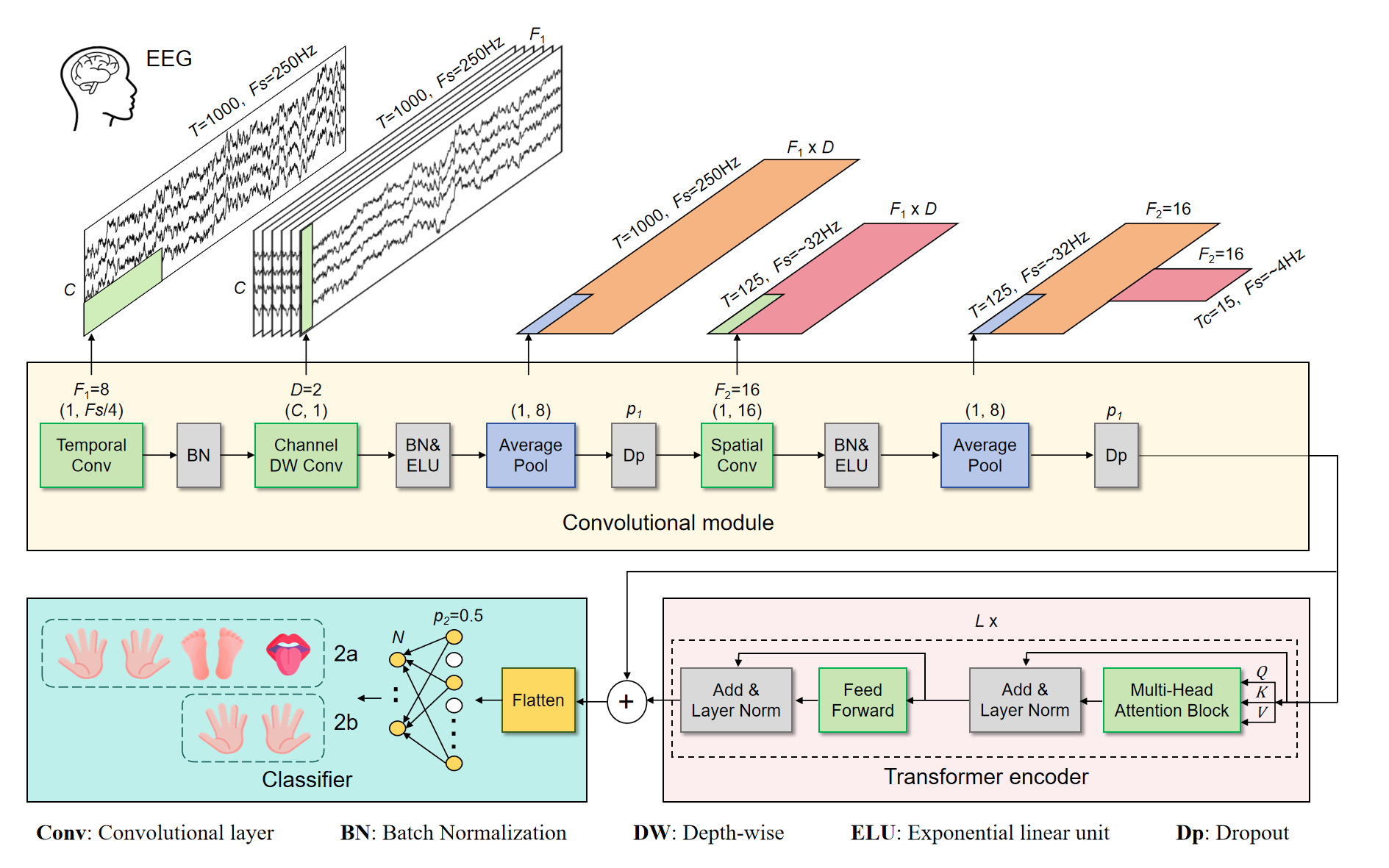

CTNet is an end-to-end neural network architecture designed for classifying motor imagery (MI) tasks from EEG signals. The model combines convolutional neural networks (CNNs) with a Transformer encoder to capture both local and global temporal dependencies in the EEG data.

The architecture consists of three main components:

- Convolutional Module:

  - Applies EEGNet to perform feature extraction, denoted here as the _PatchEmbeddingEEGNet module.

- Transformer Encoder Module:

  - Uses multi-head self-attention, as in EEGConformer, but with residual blocks.

- Classifier Module:

  - Combines features from both the convolutional module and the Transformer encoder.

  - Flattens the combined features and applies dropout for regularization.

  - Uses a fully connected layer to produce the final classification output.
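To make the data flow through the three components concrete, here is a minimal PyTorch sketch. It is not Braindecode's implementation: `PatchEmbeddingSketch` and `CTNetSketch` are hypothetical names, and several details are assumptions: the separable-convolution kernel length of 16, the learnable positional encoding, the encoder's feed-forward width and internal dropout, `torch.nn.TransformerEncoderLayer` standing in for CTNet's own residual encoder blocks, and the element-wise residual sum used to combine the convolutional and Transformer features.

```python
import torch
from torch import nn


class PatchEmbeddingSketch(nn.Module):
    """Hypothetical EEGNet-style patch embedding (stand-in for _PatchEmbeddingEEGNet)."""

    def __init__(self, n_chans, n_filters_time=20, kernel_size=64, depth_multiplier=2,
                 pool_size_1=8, pool_size_2=8, drop_prob=0.3, activation=nn.ELU):
        super().__init__()
        n_maps = n_filters_time * depth_multiplier  # feature maps = token size
        self.net = nn.Sequential(
            # Temporal convolution along the time axis ("same" keeps n_times).
            nn.Conv2d(1, n_filters_time, (1, kernel_size), padding="same", bias=False),
            nn.BatchNorm2d(n_filters_time),
            # Depthwise spatial convolution collapsing all EEG channels.
            nn.Conv2d(n_filters_time, n_maps, (n_chans, 1), groups=n_filters_time, bias=False),
            nn.BatchNorm2d(n_maps),
            activation(),
            nn.AvgPool2d((1, pool_size_1)),
            nn.Dropout(drop_prob),
            # Separable convolution: depthwise temporal (kernel 16 is an assumption) + pointwise.
            nn.Conv2d(n_maps, n_maps, (1, 16), padding="same", groups=n_maps, bias=False),
            nn.Conv2d(n_maps, n_maps, 1, bias=False),
            nn.BatchNorm2d(n_maps),
            activation(),
            nn.AvgPool2d((1, pool_size_2)),
            nn.Dropout(drop_prob),
        )

    def forward(self, x):
        # x: (batch, n_chans, n_times) -> (batch, n_maps, 1, seq_len)
        x = self.net(x.unsqueeze(1))
        # -> (batch, seq_len, n_maps): one token per pooled time step
        return x.squeeze(2).transpose(1, 2)


class CTNetSketch(nn.Module):
    """Hypothetical CTNet-like model: patch embedding -> Transformer -> classifier."""

    def __init__(self, n_chans, n_outputs, n_times, embed_dim=40, num_heads=4, num_layers=6,
                 kernel_size=64, depth_multiplier=2, pool_size_1=8, pool_size_2=8,
                 cnn_drop_prob=0.3, att_positional_drop_prob=0.1, final_drop_prob=0.5):
        super().__init__()
        self.patch = PatchEmbeddingSketch(
            n_chans, embed_dim // depth_multiplier, kernel_size, depth_multiplier,
            pool_size_1, pool_size_2, cnn_drop_prob,
        )
        seq_len = n_times // (pool_size_1 * pool_size_2)
        # Learnable positional encoding followed by dropout (assumed form).
        self.pos = nn.Parameter(torch.zeros(1, seq_len, embed_dim))
        self.pos_drop = nn.Dropout(att_positional_drop_prob)
        # Stand-in encoder: each layer wraps attention and feed-forward in residual connections.
        layer = nn.TransformerEncoderLayer(
            embed_dim, num_heads, dim_feedforward=4 * embed_dim,
            dropout=0.1, activation="gelu", batch_first=True,
        )
        self.encoder = nn.TransformerEncoder(layer, num_layers)
        self.head = nn.Sequential(
            nn.Flatten(), nn.Dropout(final_drop_prob), nn.Linear(seq_len * embed_dim, n_outputs)
        )

    def forward(self, x):
        tokens = self.pos_drop(self.patch(x) + self.pos)
        # Combine convolutional and Transformer features with a residual sum (assumption).
        features = tokens + self.encoder(tokens)
        return self.head(features)


model = CTNetSketch(n_chans=22, n_outputs=4, n_times=1000).eval()
logits = model(torch.randn(2, 22, 1000))
print(logits.shape)  # torch.Size([2, 4])
```

With 22 channels and 1000 samples, the two pooling layers (8 and 8) leave 15 tokens of size 40 in this sketch, so the final linear layer receives 600 flattened features.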

- Parameters:

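activation_patch (nn.Module, default=nn.ELU) – Activation function used in the convolutional (patch-embedding) module.

activation_transformer (nn.Module, default=nn.GELU) – Activation function used in the Transformer encoder.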

num_heads (int, default=4) – Number of attention heads in the Transformer encoder.

embed_dim (int, default=40) – Embedding size (dimensionality) of the tokens processed by the Transformer encoder.

num_layers (int, default=6) – Number of encoder layers in the Transformer.

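n_filters_time (int or None, default=None) – Number of temporal filters in the first convolutional layer. The convolutional module outputs n_filters_time * depth_multiplier feature maps, which should equal embed_dim (for example, 20 temporal filters × depth_multiplier=2 = embed_dim=40).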

kernel_size (int, default=64) – Kernel size for the temporal convolutional layer.

depth_multiplier (int, default=2) – Multiplier for the number of depth-wise convolutional filters.

pool_size_1 (int, default=8) – Pooling size for the first average pooling layer.

pool_size_2 (int, default=8) – Pooling size for the second average pooling layer.

cnn_drop_prob (float, default=0.3) – Dropout probability after the convolutional layers.

att_positional_drop_prob (float, default=0.1) – Dropout probability for the positional encoding in the Transformer.

final_drop_prob (float, default=0.5) – Dropout probability before the final classification layer.
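Several of these parameters constrain one another. The pure-Python sketch below uses a hypothetical helper, `ctnet_shapes` (not part of Braindecode), to derive the token sequence length and the size of the flattened classifier input. It assumes that the convolutions use "same" padding so only the two average pooling layers shorten the time axis, that a missing n_filters_time is derived as embed_dim // depth_multiplier, and that embed_dim must be divisible by num_heads, as in standard multi-head attention.

```python
def ctnet_shapes(n_times, embed_dim=40, depth_multiplier=2, num_heads=4,
                 pool_size_1=8, pool_size_2=8, n_filters_time=None):
    """Hypothetical helper: derive token and feature sizes from CTNet-style hyperparameters."""
    if n_filters_time is None:
        # Assumption: choose the temporal filter count so that F1 * D == embed_dim.
        n_filters_time = embed_dim // depth_multiplier
    if n_filters_time * depth_multiplier != embed_dim:
        raise ValueError("n_filters_time * depth_multiplier must equal embed_dim")
    if embed_dim % num_heads != 0:
        raise ValueError("embed_dim must be divisible by num_heads")
    # Two non-overlapping average pools shrink the time axis (assuming "same" convolutions).
    seq_len = n_times // (pool_size_1 * pool_size_2)
    return {
        "n_filters_time": n_filters_time,
        "seq_len": seq_len,
        "head_dim": embed_dim // num_heads,
        "flat_features": seq_len * embed_dim,
    }


print(ctnet_shapes(1000))
# {'n_filters_time': 20, 'seq_len': 15, 'head_dim': 10, 'flat_features': 600}
```

Because two successive floor divisions by pool_size_1 and pool_size_2 equal one floor division by their product, n_times // (pool_size_1 * pool_size_2) gives the token count directly.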

Notes

This implementation is adapted from the original CTNet source code [ctnetcode] to comply with Braindecode’s model standards.

References

[ctnet] Zhao, W., Jiang, X., Zhang, B., Xiao, S., & Weng, S. (2024). CTNet: a convolutional transformer network for EEG-based motor imagery classification. Scientific Reports, 14(1), 20237.

[ctnetcode] Zhao, W., Jiang, X., Zhang, B., Xiao, S., & Weng, S. (2024). CTNet source code: snailpt/CTNet

Methods