braindecode.models.MSVTNet#

- class braindecode.models.MSVTNet(n_chans=None, n_outputs=None, n_times=None, input_window_seconds=None, sfreq=None, chs_info=None, n_filters_list=(9, 9, 9, 9), conv1_kernels_size=(15, 31, 63, 125), conv2_kernel_size=15, depth_multiplier=2, pool1_size=8, pool2_size=7, drop_prob=0.3, num_heads=8, ffn_expansion_factor=1, att_drop_prob=0.5, num_layers=2, activation=<class 'torch.nn.modules.activation.ELU'>, return_features=False)[source]#

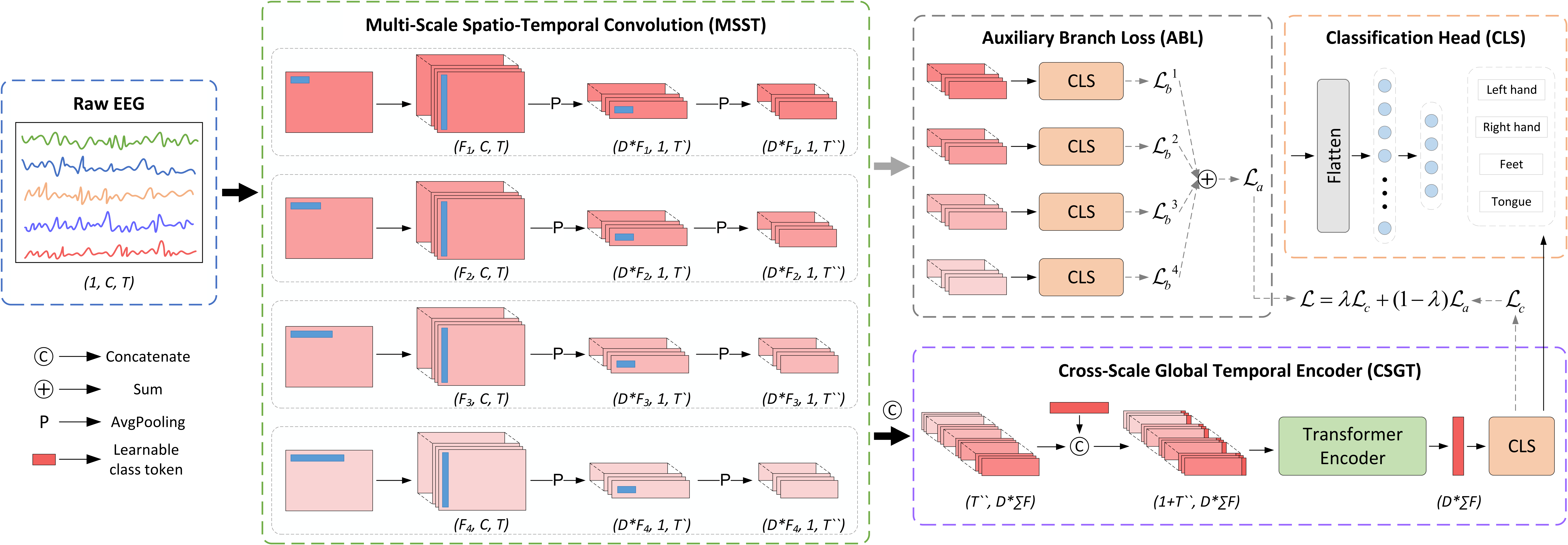

MSVTNet model from Liu K et al. (2024) [msvt2024].

Convolution Recurrent Attention/Transformer

This model implements a multi-scale convolutional transformer network for EEG signal classification, as described in [msvt2024].

- Parameters:

n_filters_list (list[int], optional) – List of filter numbers for each TSConv block, by default (9, 9, 9, 9).

conv1_kernels_size (list[int], optional) – List of kernel sizes for the first convolution in each TSConv block, by default (15, 31, 63, 125).

conv2_kernel_size (int, optional) – Kernel size for the second convolution in TSConv blocks, by default 15.

depth_multiplier (int, optional) – Depth multiplier for depthwise convolution, by default 2.

pool1_size (int, optional) – Pooling size for the first pooling layer in TSConv blocks, by default 8.

pool2_size (int, optional) – Pooling size for the second pooling layer in TSConv blocks, by default 7.

drop_prob (float, optional) – Dropout probability for convolutional layers, by default 0.3.

num_heads (int, optional) – Number of attention heads in the transformer encoder, by default 8.

ffn_expansion_factor (float, optional) – Ratio to compute feedforward dimension in the transformer, by default 1.

att_drop_prob (float, optional) – Dropout probability for the transformer, by default 0.5.

num_layers (int, optional) – Number of transformer encoder layers, by default 2.

activation (Type[nn.Module], optional) – Activation function class to use, by default nn.ELU.

return_features (bool, optional) – Whether to return predictions from branch classifiers, by default False.
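How these parameters interact can be sketched with a little arithmetic. The helper below is hypothetical (`msvtnet_dims` is not part of braindecode). It assumes that the convolutions are "same"-padded, that both pooling layers are non-overlapping and floor the length, and that the branch outputs are concatenated along the feature axis before entering the transformer. Under those assumptions it estimates the token count and embedding width seen by the transformer, and checks the usual multi-head attention requirement that the embedding width be divisible by `num_heads`.

```python
def msvtnet_dims(
    n_times,
    n_filters_list=(9, 9, 9, 9),
    depth_multiplier=2,
    pool1_size=8,
    pool2_size=7,
    num_heads=8,
    ffn_expansion_factor=1,
):
    """Estimate transformer input sizes (illustrative helper, not a braindecode API)."""
    # Assumption: each branch emits n_filters * depth_multiplier feature maps,
    # and all branches are concatenated along the feature axis.
    d_model = sum(n_filters_list) * depth_multiplier
    # Assumption: only the two pooling layers shorten the time axis.
    seq_len = (n_times // pool1_size) // pool2_size
    if d_model % num_heads != 0:
        raise ValueError(
            f"d_model={d_model} is not divisible by num_heads={num_heads}"
        )
    return {
        "d_model": d_model,
        "seq_len": seq_len,
        "head_dim": d_model // num_heads,
        "ffn_dim": int(d_model * ffn_expansion_factor),
    }


dims = msvtnet_dims(n_times=1000)
print(dims)
```

With the default arguments and a 1000-sample window, this estimate gives an embedding width of 72 split into 8 heads of size 9. Changing `n_filters_list` or `depth_multiplier` may therefore require adjusting `num_heads` as well.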

Notes

This implementation is not guaranteed to be correct and has not been checked by the original authors; it was reimplemented based on the original code [msvt2024code].

References

[msvt2024] Liu, K., et al. (2024). MSVTNet: Multi-Scale Vision Transformer Neural Network for EEG-Based Motor Imagery Decoding. IEEE Journal of Biomedical and Health Informatics.

[msvt2024code] Liu, K., et al. (2024). MSVTNet: Multi-Scale Vision Transformer Neural Network for EEG-Based Motor Imagery Decoding. Source Code: SheepTAO/MSVTNet

Methods

- forward(x)[source]#

Define the computation performed at every call.

Should be overridden by all subclasses.
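In practice, `forward` is invoked by calling the module instance rather than the method itself, because only the instance call runs registered hooks. A minimal sketch of the difference, using a plain `torch.nn.Linear` as a stand-in for the model (the hook function `record_shape` is illustrative):

```python
import torch
from torch import nn

layer = nn.Linear(4, 2)
calls = []


def record_shape(module, inputs, output):
    # Forward hook: remember the output shape each time it fires.
    calls.append(tuple(output.shape))


handle = layer.register_forward_hook(record_shape)
x = torch.randn(3, 4)

layer(x)          # instance call: runs forward() and then the hook
layer.forward(x)  # direct call: runs forward() only, the hook is skipped

handle.remove()
print(calls)  # a single entry, recorded by the instance call
```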

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

- Return type: