braindecode.models.SleepStagerBlanco2020

- class braindecode.models.SleepStagerBlanco2020(n_chans=None, sfreq=None, n_conv_chans=20, input_window_seconds=None, n_outputs=5, n_groups=2, max_pool_size=2, drop_prob=0.5, apply_batch_norm=False, return_feats=False, activation=<class 'torch.nn.modules.activation.ReLU'>, chs_info=None, n_times=None)

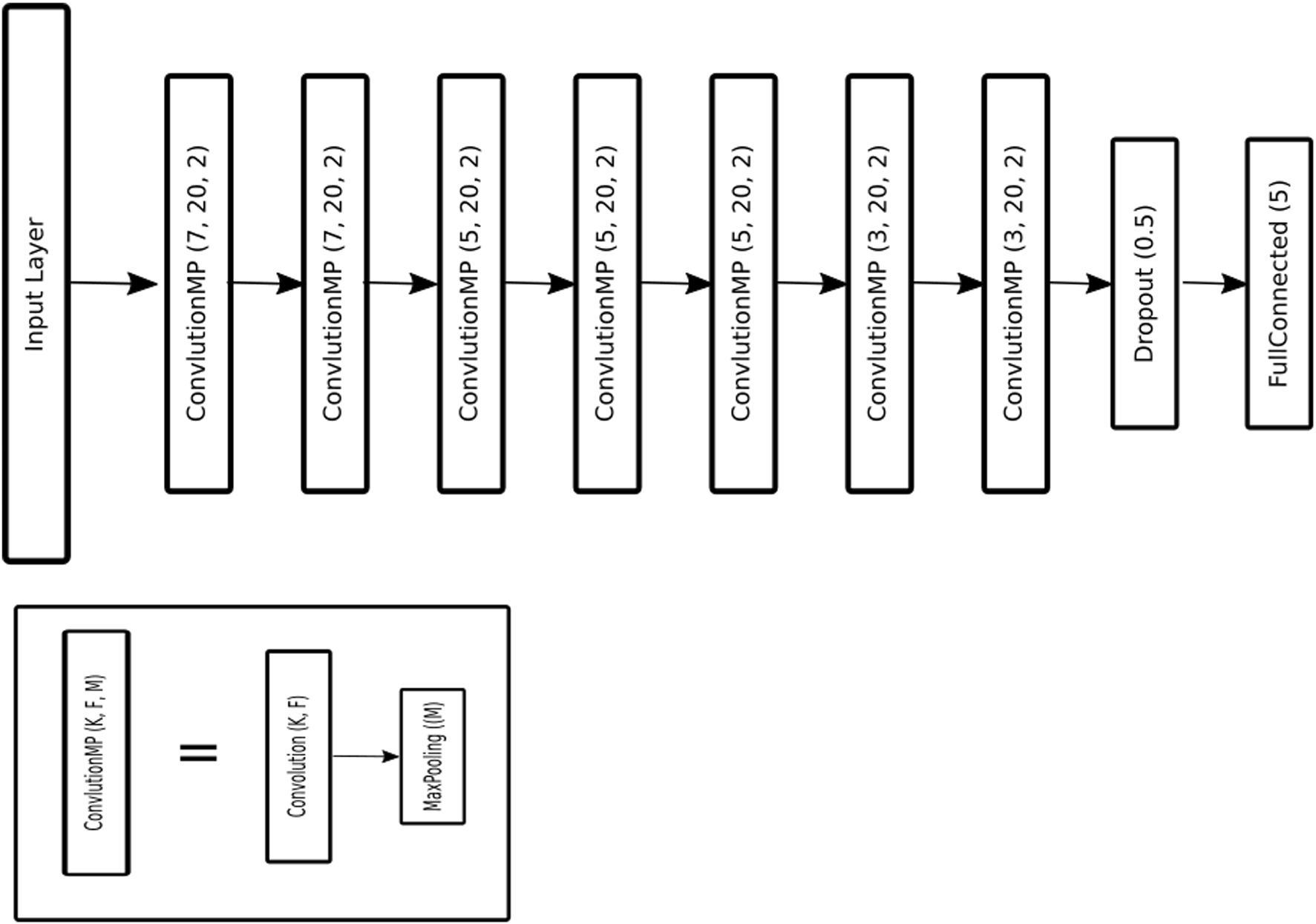

Sleep staging architecture from Blanco et al. (2020) [Blanco2020].

Convolution

Convolutional neural network for sleep staging described in [Blanco2020]. The network is a series of seven convolutional layers with kernel sizes decreasing from 7 to 3, intended to extract more general features in the early layers and more specific, complex features in the final layers.
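To get a feel for how a stack like this shrinks the time axis, the sketch below traces the temporal length through seven stages. It is an illustration under stated assumptions, not the library's implementation: the kernel sequence `[7, 7, 5, 5, 5, 3, 3]`, the unpadded stride-1 convolutions, the max pooling after every convolution, and the 3000-sample input (30 s at 100 Hz) are all assumed here, and `trace_temporal_length` is a hypothetical helper.

```python
def trace_temporal_length(n_times, kernel_sizes, max_pool_size=2):
    """Return the temporal length after each (convolution + max-pool) stage.

    Assumes unpadded, stride-1 convolutions and non-overlapping max pooling.
    """
    lengths = []
    length = n_times
    for kernel_size in kernel_sizes:
        length = length - kernel_size + 1   # unpadded convolution
        length = length // max_pool_size    # max pooling (floor division)
        if length <= 0:
            raise ValueError("Input window too short for this layer stack.")
        lengths.append(length)
    return lengths


# Assumed kernel sequence: seven layers, sizes decreasing from 7 to 3.
ASSUMED_KERNEL_SIZES = [7, 7, 5, 5, 5, 3, 3]

# Assumed input: 30 s window sampled at 100 Hz.
lengths = trace_temporal_length(3000, ASSUMED_KERNEL_SIZES)
print(lengths)
```

Under these assumptions, a very short input window raises an error because the time axis is exhausted before the last layer, which is one reason to check the window length against the layer stack.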

- Parameters:

n_conv_chans (int) – Number of convolutional channels. Set to 20 in [Blanco2020].

n_groups (int) – Number of groups for the convolution. Set to 2 in [Blanco2020] for two-channel EEG. Controls the connections between inputs and outputs. n_channels and n_conv_chans must be divisible by n_groups.

drop_prob (float) – Dropout rate before the output dense layer.

apply_batch_norm (bool) – If True, apply batch normalization after both temporal convolutional layers.

return_feats (bool) – If True, return the features, i.e. the output of the feature extractor (before the final linear layer). If False, pass the features through the final linear layer.

n_channels (int) – Alias for n_chans.

n_classes (int) – Alias for n_outputs.

input_size_s (float) – Alias for input_window_seconds.

activation (nn.Module, default=nn.ReLU) – Activation function class to apply. Should be a PyTorch activation module class like nn.ReLU or nn.ELU. Default is nn.ReLU.
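The divisibility requirement on n_groups can be checked before building a model. The sketch below is a minimal illustration, not part of braindecode: `channels_per_group` is a hypothetical helper that mirrors the usual grouped-convolution rule, in which input and output channels are each split evenly across the groups.

```python
def channels_per_group(n_chans, n_conv_chans, n_groups):
    """Return (input, output) channels handled by each convolution group.

    Raises ValueError if either channel count is not divisible by n_groups.
    """
    if n_groups <= 0:
        raise ValueError("n_groups must be a positive integer.")
    if n_chans % n_groups != 0 or n_conv_chans % n_groups != 0:
        raise ValueError(
            f"n_chans={n_chans} and n_conv_chans={n_conv_chans} "
            f"must both be divisible by n_groups={n_groups}."
        )
    return n_chans // n_groups, n_conv_chans // n_groups


# Two EEG channels, 20 convolutional channels, 2 groups (the documented defaults
# for n_conv_chans and n_groups): each group sees one input channel.
in_per_group, out_per_group = channels_per_group(2, 20, 2)
print(in_per_group, out_per_group)
```

With two input channels and two groups, each group processes a single EEG channel independently in the grouped layer, which is consistent with the two-channel setup described for n_groups.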

References

[Blanco2020] Fernandez-Blanco, E., Rivero, D. & Pazos, A. Convolutional neural networks for sleep stage scoring on a two-channel EEG signal. Soft Comput 24, 4067–4079 (2020). https://doi.org/10.1007/s00500-019-04174-1

Methods