braindecode.models package#

Some predefined network architectures for EEG decoding.

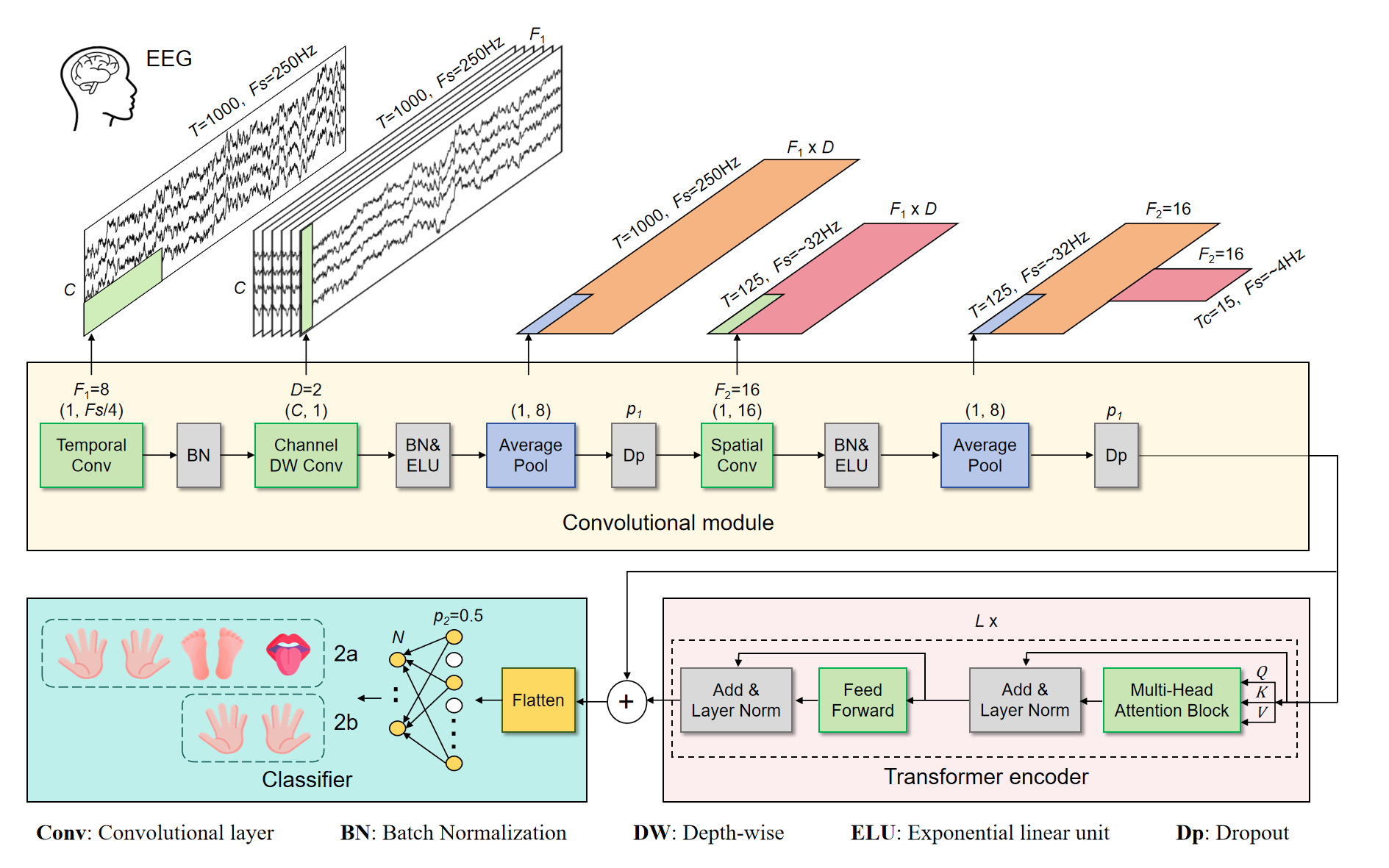

- class braindecode.models.ATCNet(n_chans=None, n_outputs=None, input_window_seconds=None, sfreq=250.0, conv_block_n_filters=16, conv_block_kernel_length_1=64, conv_block_kernel_length_2=16, conv_block_pool_size_1=8, conv_block_pool_size_2=7, conv_block_depth_mult=2, conv_block_dropout=0.3, n_windows=5, att_head_dim=8, att_num_heads=2, att_drop_prob=0.5, tcn_depth=2, tcn_kernel_size=4, tcn_drop_prob=0.3, tcn_activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, concat=False, max_norm_const=0.25, chs_info=None, n_times=None)[source]#

Bases:

EEGModuleMixin,ModuleATCNet model from Altaheri et al. (2022) [1]

Pytorch implementation based on official tensorflow code [2].

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

input_window_seconds (float, optional) – Time length of inputs, in seconds. Defaults to 4.5 s, as in BCI-IV 2a dataset.

sfreq (int, optional) – Sampling frequency of the inputs, in Hz. Default to 250 Hz, as in BCI-IV 2a dataset.

conv_block_n_filters (int) – Number temporal filters in the first convolutional layer of the convolutional block, denoted F1 in figure 2 of the paper [1]. Defaults to 16 as in [1].

conv_block_kernel_length_1 (int) – Length of temporal filters in the first convolutional layer of the convolutional block, denoted Kc in table 1 of the paper [1]. Defaults to 64 as in [1].

conv_block_kernel_length_2 (int) – Length of temporal filters in the last convolutional layer of the convolutional block. Defaults to 16 as in [1].

conv_block_pool_size_1 (int) – Length of first average pooling kernel in the convolutional block. Defaults to 8 as in [1].

conv_block_pool_size_2 (int) – Length of first average pooling kernel in the convolutional block, denoted P2 in table 1 of the paper [1]. Defaults to 7 as in [1].

conv_block_depth_mult (int) – Depth multiplier of depthwise convolution in the convolutional block, denoted D in table 1 of the paper [1]. Defaults to 2 as in [1].

conv_block_dropout (float) – Dropout probability used in the convolution block, denoted pc in table 1 of the paper [1]. Defaults to 0.3 as in [1].

n_windows (int) – Number of sliding windows, denoted n in [1]. Defaults to 5 as in [1].

att_head_dim (int) – Embedding dimension used in each self-attention head, denoted dh in table 1 of the paper [1]. Defaults to 8 as in [1].

att_num_heads (int) – Number of attention heads, denoted H in table 1 of the paper [1]. Defaults to 2 as in [1].

att_drop_prob – The description is missing.

tcn_depth (int) – Depth of Temporal Convolutional Network block (i.e. number of TCN Residual blocks), denoted L in table 1 of the paper [1]. Defaults to 2 as in [1].

tcn_kernel_size (int) – Temporal kernel size used in TCN block, denoted Kt in table 1 of the paper [1]. Defaults to 4 as in [1].

tcn_drop_prob – The description is missing.

tcn_activation (torch.nn.Module) – Nonlinear activation to use. Defaults to nn.ELU().

concat (bool) – When

True, concatenates each slidding window embedding before feeding it to a fully-connected layer, as done in [1]. WhenFalse, maps each slidding window to n_outputs logits and average them. Defaults toFalsecontrary to what is reported in [1], but matching what the official code does [2].max_norm_const (float) – Maximum L2-norm constraint imposed on weights of the last fully-connected layer. Defaults to 0.25.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.n_times (int) – Number of time samples of the input window.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

If some input signal-related parameters are not specified, there will be an attempt to infer them from the other parameters.

References

[1] (1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25)H. Altaheri, G. Muhammad and M. Alsulaiman, Physics-informed attention temporal convolutional network for EEG-based motor imagery classification in IEEE Transactions on Industrial Informatics, 2022, doi: 10.1109/TII.2022.3197419.

- forward(X)[source]#

Define the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the

Moduleinstance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.- Parameters:

X – The description is missing.

- class braindecode.models.AttentionBaseNet(n_times=None, n_chans=None, n_outputs=None, chs_info=None, sfreq=None, input_window_seconds=None, n_temporal_filters: int = 40, temp_filter_length_inp: int = 25, spatial_expansion: int = 1, pool_length_inp: int = 75, pool_stride_inp: int = 15, drop_prob_inp: float = 0.5, ch_dim: int = 16, temp_filter_length: int = 15, pool_length: int = 8, pool_stride: int = 8, drop_prob_attn: float = 0.5, attention_mode: str | None = None, reduction_rate: int = 4, use_mlp: bool = False, freq_idx: int = 0, n_codewords: int = 4, kernel_size: int = 9, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, extra_params: bool = False)[source]#

Bases:

EEGModuleMixin,ModuleAttentionBaseNet from Wimpff M et al. (2023) [Martin2023].

Neural Network from the paper: EEG motor imagery decoding: A framework for comparative analysis with channel attention mechanisms

The paper and original code with more details about the methodological choices are available at the [Martin2023] and [MartinCode].

The AttentionBaseNet architecture is composed of four modules: - Input Block that performs a temporal convolution and a spatial convolution. - Channel Expansion that modifies the number of channels. - An attention block that performs channel attention with several options - ClassificationHead

Added in version 0.9.

- Parameters:

n_times (int) – Number of time samples of the input window.

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.sfreq (float) – Sampling frequency of the EEG recordings.

input_window_seconds (float) – Length of the input window in seconds.

n_temporal_filters (int, optional) – Number of temporal convolutional filters in the first layer. This defines the number of output channels after the temporal convolution. Default is 40.

temp_filter_length_inp – The description is missing.

spatial_expansion (int, optional) – Multiplicative factor to expand the spatial dimensions. Used to increase the capacity of the model by expanding spatial features. Default is 1.

pool_length_inp (int, optional) – Length of the pooling window in the input layer. Determines how much temporal information is aggregated during pooling. Default is 75.

pool_stride_inp (int, optional) – Stride of the pooling operation in the input layer. Controls the downsampling factor in the temporal dimension. Default is 15.

drop_prob_inp (float, optional) – Dropout rate applied after the input layer. This is the probability of zeroing out elements during training to prevent overfitting. Default is 0.5.

ch_dim (int, optional) – Number of channels in the subsequent convolutional layers. This controls the depth of the network after the initial layer. Default is 16.

temp_filter_length (int, default=15) – The length of the temporal filters in the convolutional layers.

pool_length (int, default=8) – The length of the window for the average pooling operation.

pool_stride (int, default=8) – The stride of the average pooling operation.

drop_prob_attn (float, default=0.5) – The dropout rate for regularization for the attention layer. Values should be between 0 and 1.

attention_mode (str, optional) – The type of attention mechanism to apply. If None, no attention is applied. - “se” for Squeeze-and-excitation network - “gsop” for Global Second-Order Pooling - “fca” for Frequency Channel Attention Network - “encnet” for context encoding module - “eca” for Efficient channel attention for deep convolutional neural networks - “ge” for Gather-Excite - “gct” for Gated Channel Transformation - “srm” for Style-based Recalibration Module - “cbam” for Convolutional Block Attention Module - “cat” for Learning to collaborate channel and temporal attention from multi-information fusion - “catlite” for Learning to collaborate channel attention from multi-information fusion (lite version, cat w/o temporal attention)

reduction_rate (int, default=4) – The reduction rate used in the attention mechanism to reduce dimensionality and computational complexity.

use_mlp (bool, default=False) – Flag to indicate whether an MLP (Multi-Layer Perceptron) should be used within the attention mechanism for further processing.

freq_idx (int, default=0) – DCT index used in fca attention mechanism.

n_codewords (int, default=4) – The number of codewords (clusters) used in attention mechanisms that employ quantization or clustering strategies.

kernel_size (int, default=9) – The kernel size used in certain types of attention mechanisms for convolution operations.

activation (nn.Module, default=nn.ELU) – Activation function class to apply. Should be a PyTorch activation module class like

nn.ReLUornn.ELU. Default isnn.ELU.extra_params (bool, default=False) – Flag to indicate whether additional, custom parameters should be passed to the attention mechanism.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

If some input signal-related parameters are not specified, there will be an attempt to infer them from the other parameters.

References

[Martin2023] (1,2)Wimpff, M., Gizzi, L., Zerfowski, J. and Yang, B., 2023. EEG motor imagery decoding: A framework for comparative analysis with channel attention mechanisms. arXiv preprint arXiv:2310.11198.

[MartinCode]Wimpff, M., Gizzi, L., Zerfowski, J. and Yang, B. GitHub martinwimpff/channel-attention (accessed 2024-03-28)

- forward(x)[source]#

Define the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the

Moduleinstance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.- Parameters:

x – The description is missing.

- class braindecode.models.BDTCN(n_chans=None, n_outputs=None, chs_info=None, n_times=None, sfreq=None, input_window_seconds=None, n_blocks=3, n_filters=30, kernel_size=5, drop_prob=0.5, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ReLU'>)[source]#

Bases:

EEGModuleMixin,ModuleBraindecode TCN from Gemein, L et al (2020) [gemein2020].

See [gemein2020] for details.

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.n_times (int) – Number of time samples of the input window.

sfreq (float) – Sampling frequency of the EEG recordings.

input_window_seconds (float) – Length of the input window in seconds.

n_blocks (int) – number of temporal blocks in the network

n_filters (int) – number of output filters of each convolution

kernel_size (int) – kernel size of the convolutions

drop_prob (float) – dropout probability

activation (nn.Module, default=nn.ReLU) – Activation function class to apply. Should be a PyTorch activation module class like

nn.ReLUornn.ELU. Default isnn.ReLU.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

If some input signal-related parameters are not specified, there will be an attempt to infer them from the other parameters.

References

- forward(x)[source]#

Define the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the

Moduleinstance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.- Parameters:

x – The description is missing.

- class braindecode.models.BIOT(emb_size=256, att_num_heads=8, n_layers=4, sfreq=200, hop_length=100, return_feature=False, n_outputs=None, n_chans=None, chs_info=None, n_times=None, input_window_seconds=None, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, drop_prob: float = 0.5, max_seq_len: int = 1024, attn_dropout=0.2, attn_layer_dropout=0.2)[source]#

Bases:

EEGModuleMixin,ModuleBIOT from Yang et al. (2023) [Yang2023]

BIOT: Cross-data Biosignal Learning in the Wild.

BIOT is a large language model for biosignal classification. It is a wrapper around the BIOTEncoder and ClassificationHead modules.

It is designed for N-dimensional biosignal data such as EEG, ECG, etc. The method was proposed by Yang et al. [Yang2023] and the code is available at [Code2023]

The model is trained with a contrastive loss on large EEG datasets TUH Abnormal EEG Corpus with 400K samples and Sleep Heart Health Study 5M. Here, we only provide the model architecture, not the pre-trained weights or contrastive loss training.

The architecture is based on the LinearAttentionTransformer and PatchFrequencyEmbedding modules. The BIOTEncoder is a transformer that takes the input data and outputs a fixed-size representation of the input data. More details are present in the BIOTEncoder class.

The ClassificationHead is an ELU activation layer, followed by a simple linear layer that takes the output of the BIOTEncoder and outputs the classification probabilities.

Added in version 0.9.

- Parameters:

emb_size (int, optional) – The size of the embedding layer, by default 256

att_num_heads (int, optional) – The number of attention heads, by default 8

n_layers (int, optional) – The number of transformer layers, by default 4

sfreq (int, optional) – The sfreq parameter for the encoder. The default is 200

hop_length (int, optional) – The hop length for the torch.stft transformation in the encoder. The default is 100.

return_feature (bool, optional) – Changing the output for the neural network. Default is single tensor when return_feature is True, return embedding space too. Default is False.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_chans (int) – Number of EEG channels.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.n_times (int) – Number of time samples of the input window.

input_window_seconds (float) – Length of the input window in seconds.

activation (nn.Module, default=nn.ELU) – Activation function class to apply. Should be a PyTorch activation module class like

nn.ReLUornn.ELU. Default isnn.ELU.drop_prob – The description is missing.

max_seq_len – The description is missing.

attn_dropout – The description is missing.

attn_layer_dropout – The description is missing.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

If some input signal-related parameters are not specified, there will be an attempt to infer them from the other parameters.

References

[Yang2023] (1,2)Yang, C., Westover, M.B. and Sun, J., 2023, November. BIOT: Biosignal Transformer for Cross-data Learning in the Wild. In Thirty-seventh Conference on Neural Information Processing Systems, NeurIPS.

[Code2023]Yang, C., Westover, M.B. and Sun, J., 2023. BIOT Biosignal Transformer for Cross-data Learning in the Wild. GitHub ycq091044/BIOT (accessed 2024-02-13)

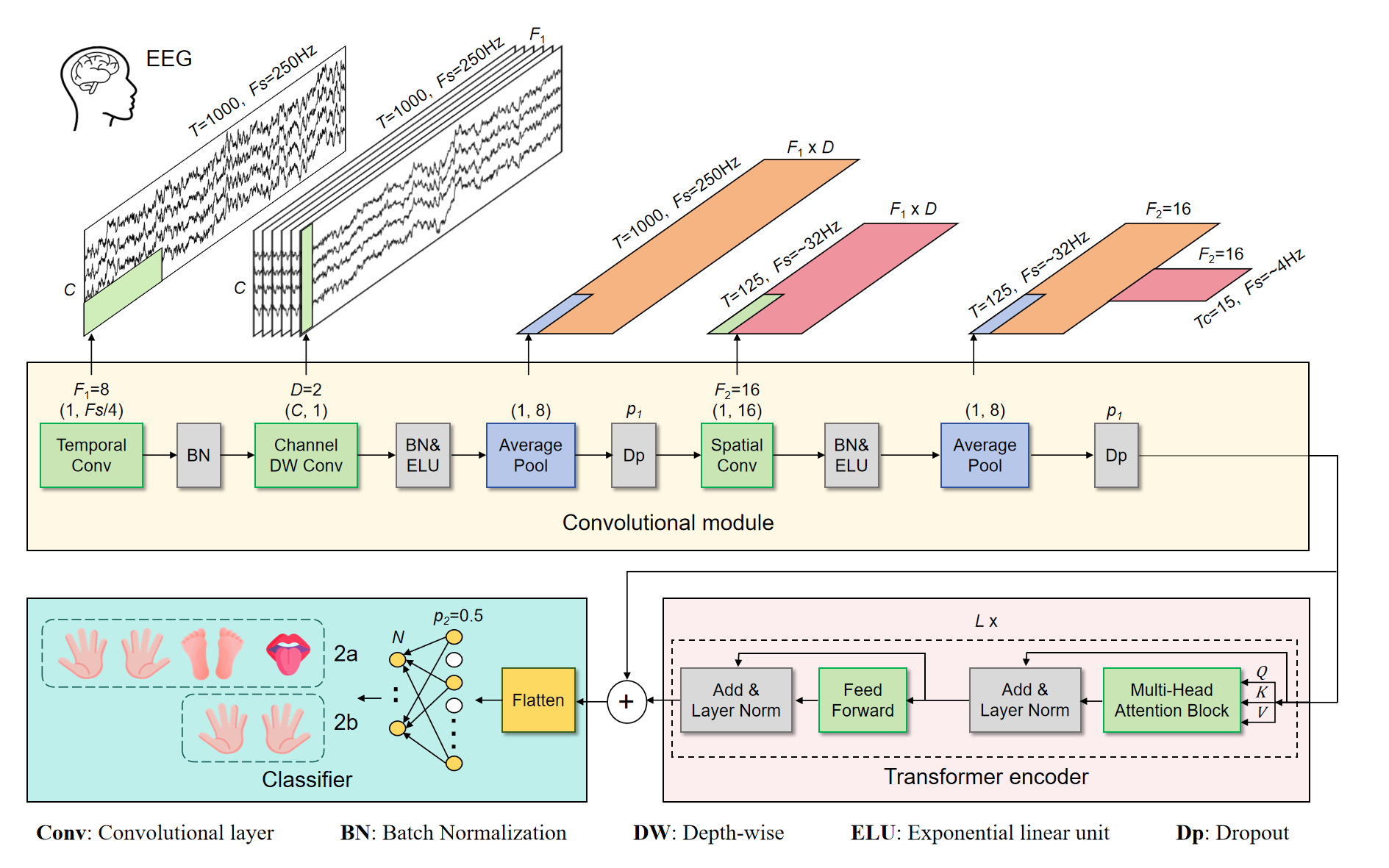

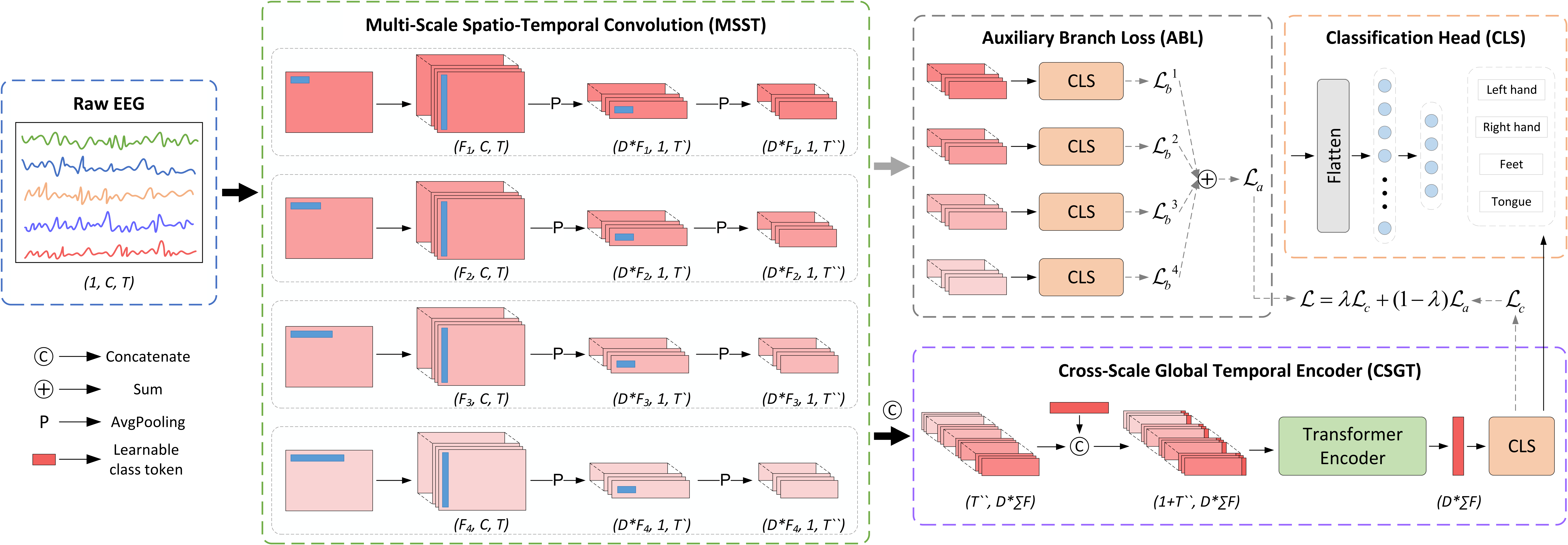

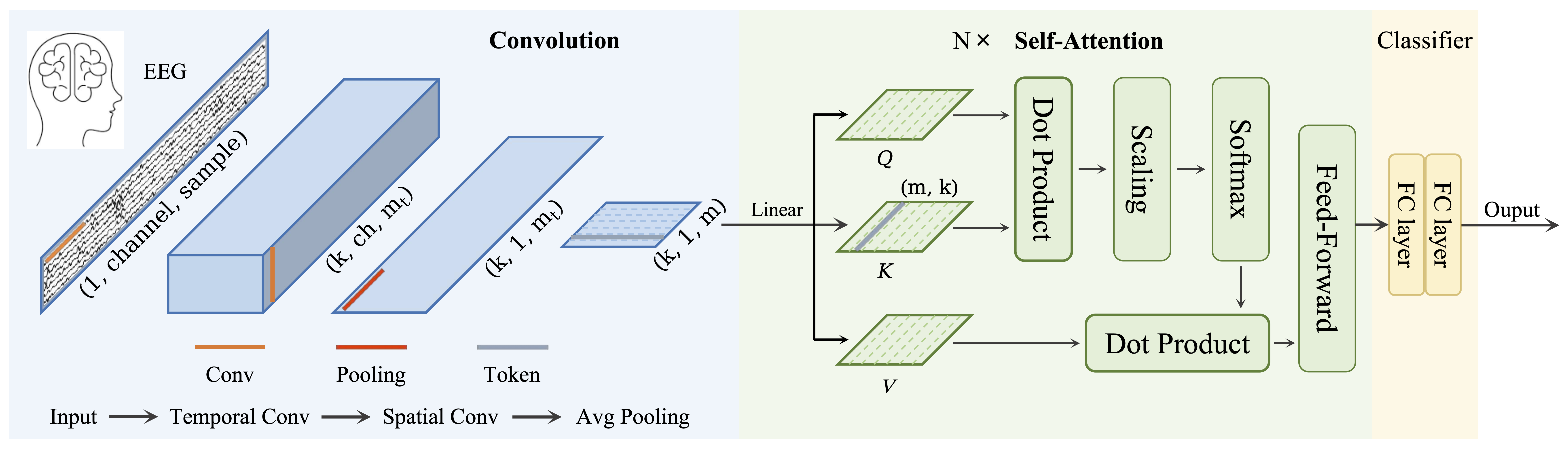

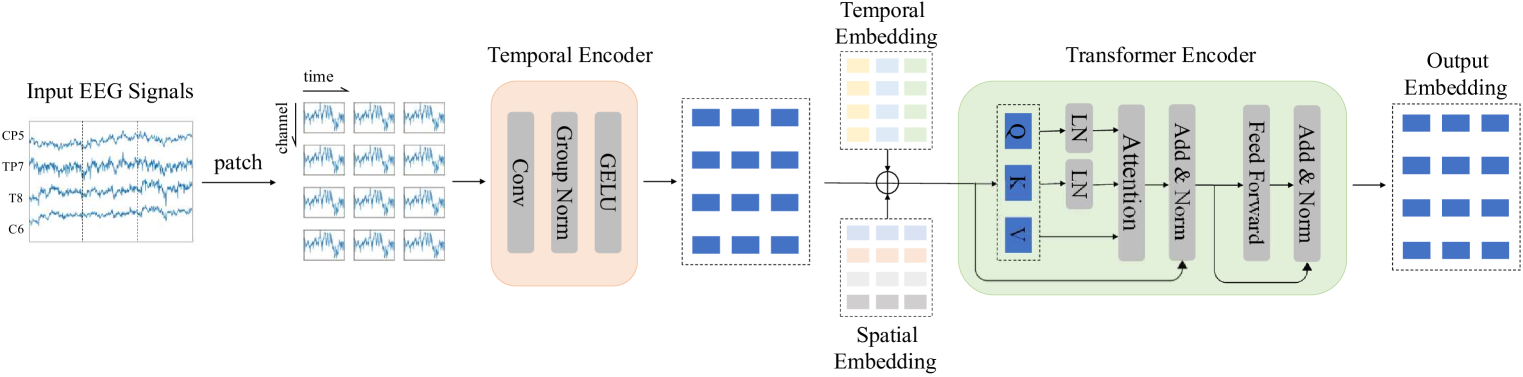

- class braindecode.models.CTNet(n_outputs=None, n_chans=None, sfreq=None, chs_info=None, n_times=None, input_window_seconds=None, activation_patch: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, activation_transformer: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.GELU'>, drop_prob_cnn: float = 0.3, drop_prob_posi: float = 0.1, drop_prob_final: float = 0.5, heads: int = 4, emb_size: int | None = 40, depth: int = 6, n_filters_time: int | None = None, kernel_size: int = 64, depth_multiplier: int | None = 2, pool_size_1: int = 8, pool_size_2: int = 8)[source]#

Bases:

EEGModuleMixin,ModuleCTNet from Zhao, W et al (2024) [ctnet].

A Convolutional Transformer Network for EEG-Based Motor Imagery Classification

CTNet is an end-to-end neural network architecture designed for classifying motor imagery (MI) tasks from EEG signals. The model combines convolutional neural networks (CNNs) with a Transformer encoder to capture both local and global temporal dependencies in the EEG data.

The architecture consists of three main components:

- Convolutional Module:

Apply EEGNetV4 to perform some feature extraction, denoted here as

_PatchEmbeddingEEGNet module.

- Transformer Encoder Module:

Utilizes multi-head self-attention mechanisms as EEGConformer but

with residual blocks.

- Classifier Module:

Combines features from both the convolutional module

and the Transformer encoder. - Flattens the combined features and applies dropout for regularization. - Uses a fully connected layer to produce the final classification output.

- Parameters:

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_chans (int) – Number of EEG channels.

sfreq (float) – Sampling frequency of the EEG recordings.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.n_times (int) – Number of time samples of the input window.

input_window_seconds (float) – Length of the input window in seconds.

activation_patch – The description is missing.

activation_transformer – The description is missing.

drop_prob_cnn (float, default=0.3) – Dropout probability after convolutional layers.

drop_prob_posi (float, default=0.1) – Dropout probability for the positional encoding in the Transformer.

drop_prob_final (float, default=0.5) – Dropout probability before the final classification layer.

heads (int, default=4) – Number of attention heads in the Transformer encoder.

emb_size (int or None, default=None) – Embedding size (dimensionality) for the Transformer encoder.

depth (int, default=6) – Number of encoder layers in the Transformer.

n_filters_time (int, default=20) – Number of temporal filters in the first convolutional layer.

kernel_size (int, default=64) – Kernel size for the temporal convolutional layer.

depth_multiplier (int, default=2) – Multiplier for the number of depth-wise convolutional filters.

pool_size_1 (int, default=8) – Pooling size for the first average pooling layer.

pool_size_2 (int, default=8) – Pooling size for the second average pooling layer.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

This implementation is adapted from the original CTNet source code [ctnetcode] to comply with Braindecode’s model standards.

References

[ctnet]Zhao, W., Jiang, X., Zhang, B., Xiao, S., & Weng, S. (2024). CTNet: a convolutional transformer network for EEG-based motor imagery classification. Scientific Reports, 14(1), 20237.

[ctnetcode]Zhao, W., Jiang, X., Zhang, B., Xiao, S., & Weng, S. (2024). CTNet source code: snailpt/CTNet

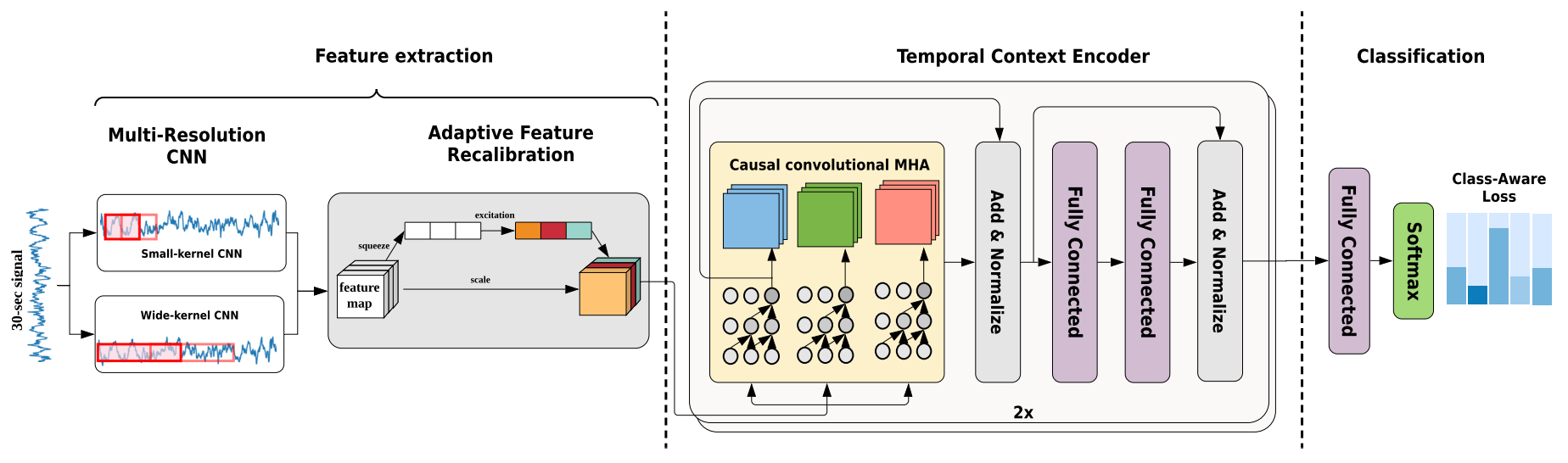

- class braindecode.models.ContraWR(n_chans=None, n_outputs=None, sfreq=None, emb_size: int = 256, res_channels: list[int] = [32, 64, 128], steps=20, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, drop_prob: float = 0.5, stride_res: int = 2, kernel_size_res: int = 3, padding_res: int = 1, chs_info=None, n_times=None, input_window_seconds=None)[source]#

Bases:

EEGModuleMixin,ModuleContrast with the World Representation ContraWR from Yang et al (2021) [Yang2021].

This model is a convolutional neural network that uses a spectral representation with a series of convolutional layers and residual blocks. The model is designed to learn a representation of the EEG signal that can be used for sleep staging.

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

sfreq (float) – Sampling frequency of the EEG recordings.

emb_size (int, optional) – Embedding size for the final layer, by default 256.

res_channels (list[int], optional) – Number of channels for each residual block, by default [32, 64, 128].

steps (int, optional) – Number of steps to take the frequency decomposition hop_length parameters by default 20.

activation (nn.Module, default=nn.ELU) – Activation function class to apply. Should be a PyTorch activation module class like

nn.ReLUornn.ELU. Default isnn.ELU.drop_prob (float, default=0.5) – The dropout rate for regularization. Values should be between 0 and 1.

versionadded: (..) – 0.9:

stride_res – The description is missing.

kernel_size_res – The description is missing.

padding_res – The description is missing.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.n_times (int) – Number of time samples of the input window.

input_window_seconds (float) – Length of the input window in seconds.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

This implementation is not guaranteed to be correct, has not been checked by original authors. The modifications are minimal and the model is expected to work as intended. the original code from [Code2023].

References

[Yang2021]Yang, C., Xiao, C., Westover, M. B., & Sun, J. (2023). Self-supervised electroencephalogram representation learning for automatic sleep staging: model development and evaluation study. JMIR AI, 2(1), e46769.

[Code2023]Yang, C., Westover, M.B. and Sun, J., 2023. BIOT Biosignal Transformer for Cross-data Learning in the Wild. GitHub ycq091044/BIOT (accessed 2024-02-13)

- class braindecode.models.Deep4Net(n_chans=None, n_outputs=None, n_times=None, final_conv_length='auto', n_filters_time=25, n_filters_spat=25, filter_time_length=10, pool_time_length=3, pool_time_stride=3, n_filters_2=50, filter_length_2=10, n_filters_3=100, filter_length_3=10, n_filters_4=200, filter_length_4=10, activation_first_conv_nonlin: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, first_pool_mode='max', first_pool_nonlin: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.linear.Identity'>, activation_later_conv_nonlin: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, later_pool_mode='max', later_pool_nonlin: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.linear.Identity'>, drop_prob=0.5, split_first_layer=True, batch_norm=True, batch_norm_alpha=0.1, stride_before_pool=False, chs_info=None, input_window_seconds=None, sfreq=None)[source]#

Bases:

EEGModuleMixin,SequentialDeep ConvNet model from Schirrmeister et al (2017) [Schirrmeister2017].

Model described in [Schirrmeister2017].

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_times (int) – Number of time samples of the input window.

final_conv_length (int | str) – Length of the final convolution layer. If set to “auto”, n_times must not be None. Default: “auto”.

n_filters_time (int) – Number of temporal filters.

n_filters_spat (int) – Number of spatial filters.

filter_time_length (int) – Length of the temporal filter in layer 1.

pool_time_length (int) – Length of temporal pooling filter.

pool_time_stride (int) – Length of stride between temporal pooling filters.

n_filters_2 (int) – Number of temporal filters in layer 2.

filter_length_2 (int) – Length of the temporal filter in layer 2.

n_filters_3 (int) – Number of temporal filters in layer 3.

filter_length_3 (int) – Length of the temporal filter in layer 3.

n_filters_4 (int) – Number of temporal filters in layer 4.

filter_length_4 (int) – Length of the temporal filter in layer 4.

activation_first_conv_nonlin (nn.Module, default is nn.ELU) – Non-linear activation function to be used after convolution in layer 1.

first_pool_mode (str) – Pooling mode in layer 1. “max” or “mean”.

first_pool_nonlin (callable) – Non-linear activation function to be used after pooling in layer 1.

activation_later_conv_nonlin (nn.Module, default is nn.ELU) – Non-linear activation function to be used after convolution in later layers.

later_pool_mode (str) – Pooling mode in later layers. “max” or “mean”.

later_pool_nonlin (callable) – Non-linear activation function to be used after pooling in later layers.

drop_prob (float) – Dropout probability.

split_first_layer (bool) – Split first layer into temporal and spatial layers (True) or just use temporal (False). There would be no non-linearity between the split layers.

batch_norm (bool) – Whether to use batch normalisation.

batch_norm_alpha (float) – Momentum for BatchNorm2d.

stride_before_pool (bool) – Stride before pooling.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

If some input signal-related parameters are not specified, there will be an attempt to infer them from the other parameters.

References

[Schirrmeister2017] (1,2)Schirrmeister, R. T., Springenberg, J. T., Fiederer, L. D. J., Glasstetter, M., Eggensperger, K., Tangermann, M., Hutter, F. & Ball, T. (2017). Deep learning with convolutional neural networks for EEG decoding and visualization. Human Brain Mapping , Aug. 2017. Online: http://dx.doi.org/10.1002/hbm.23730

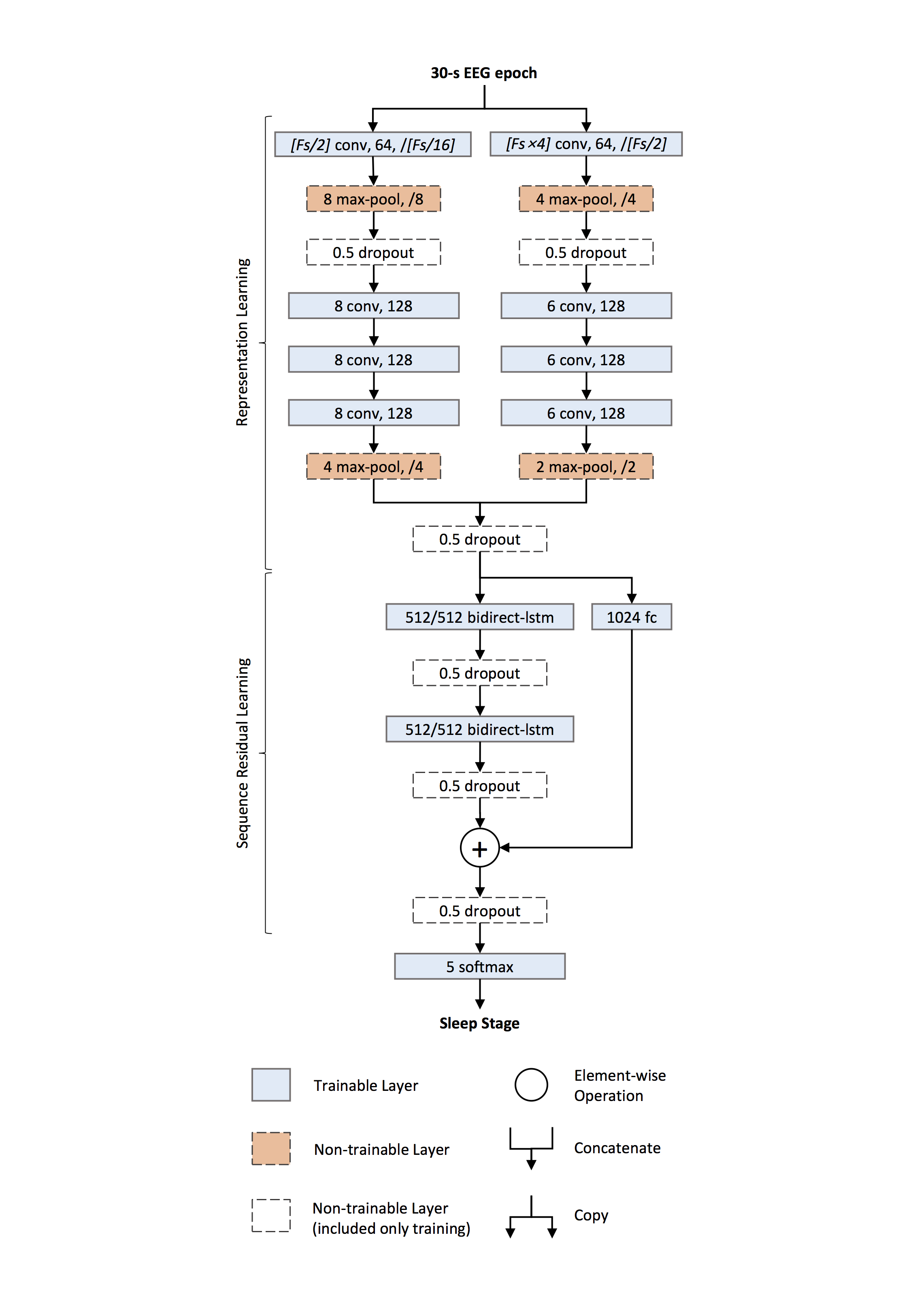

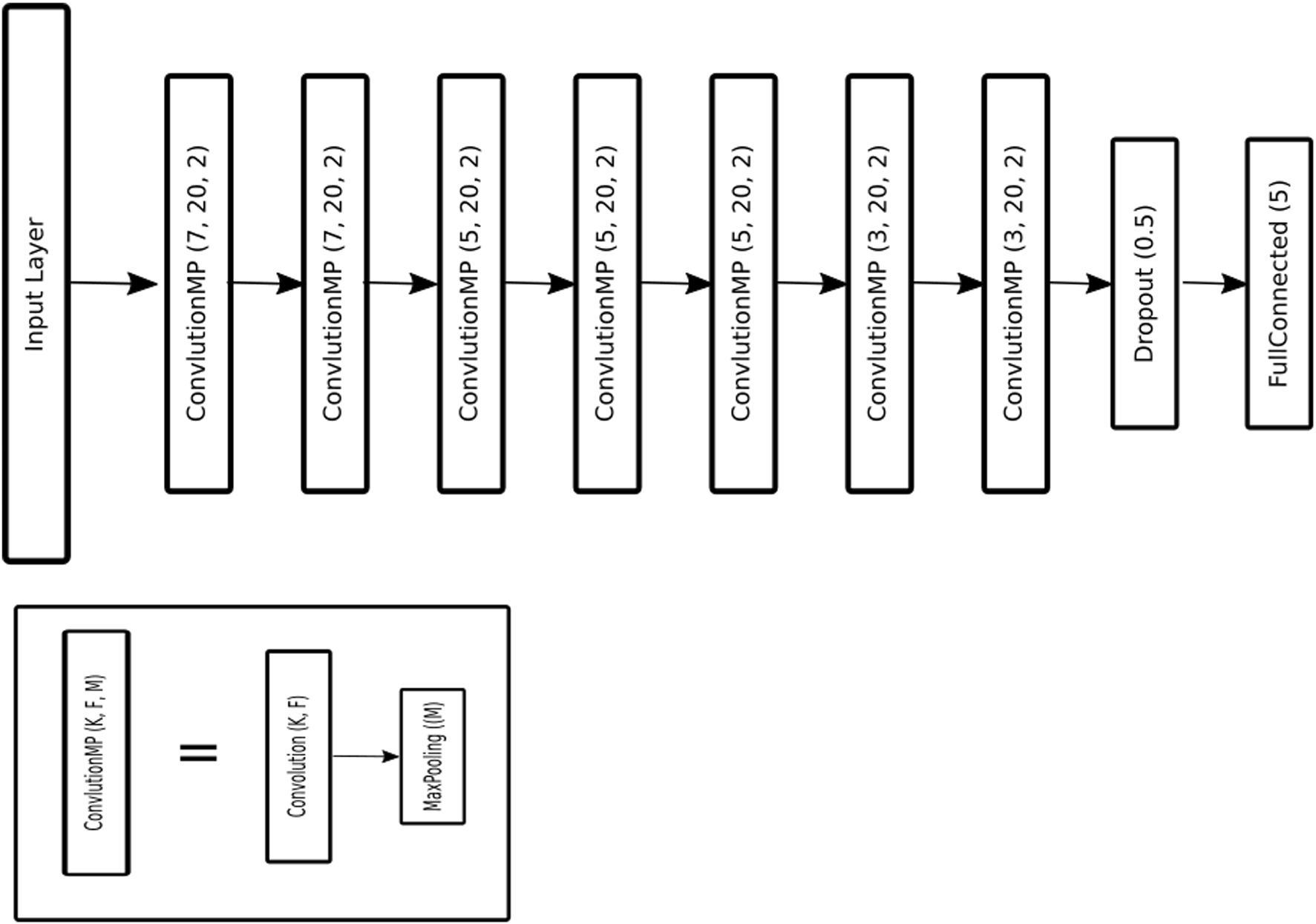

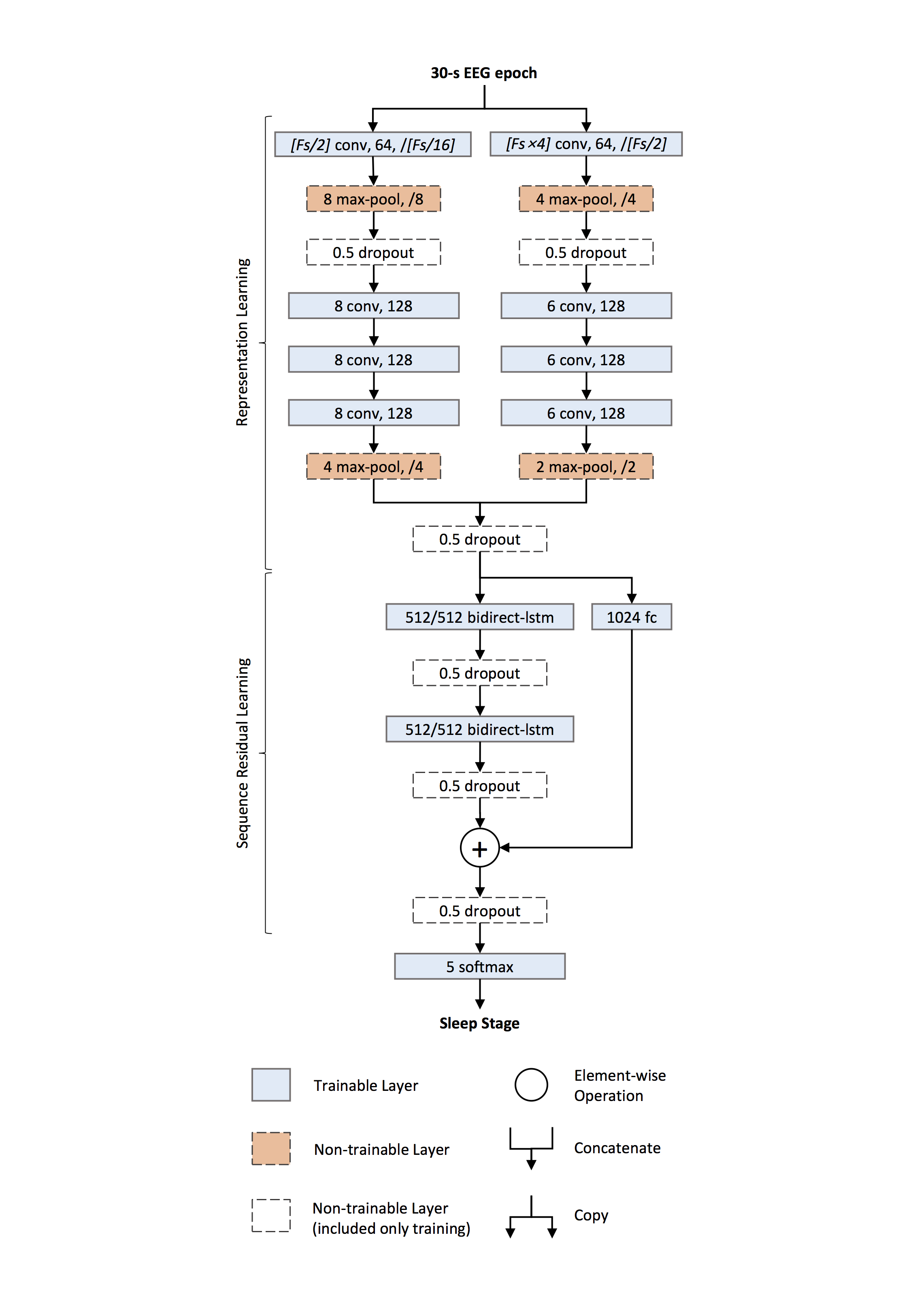

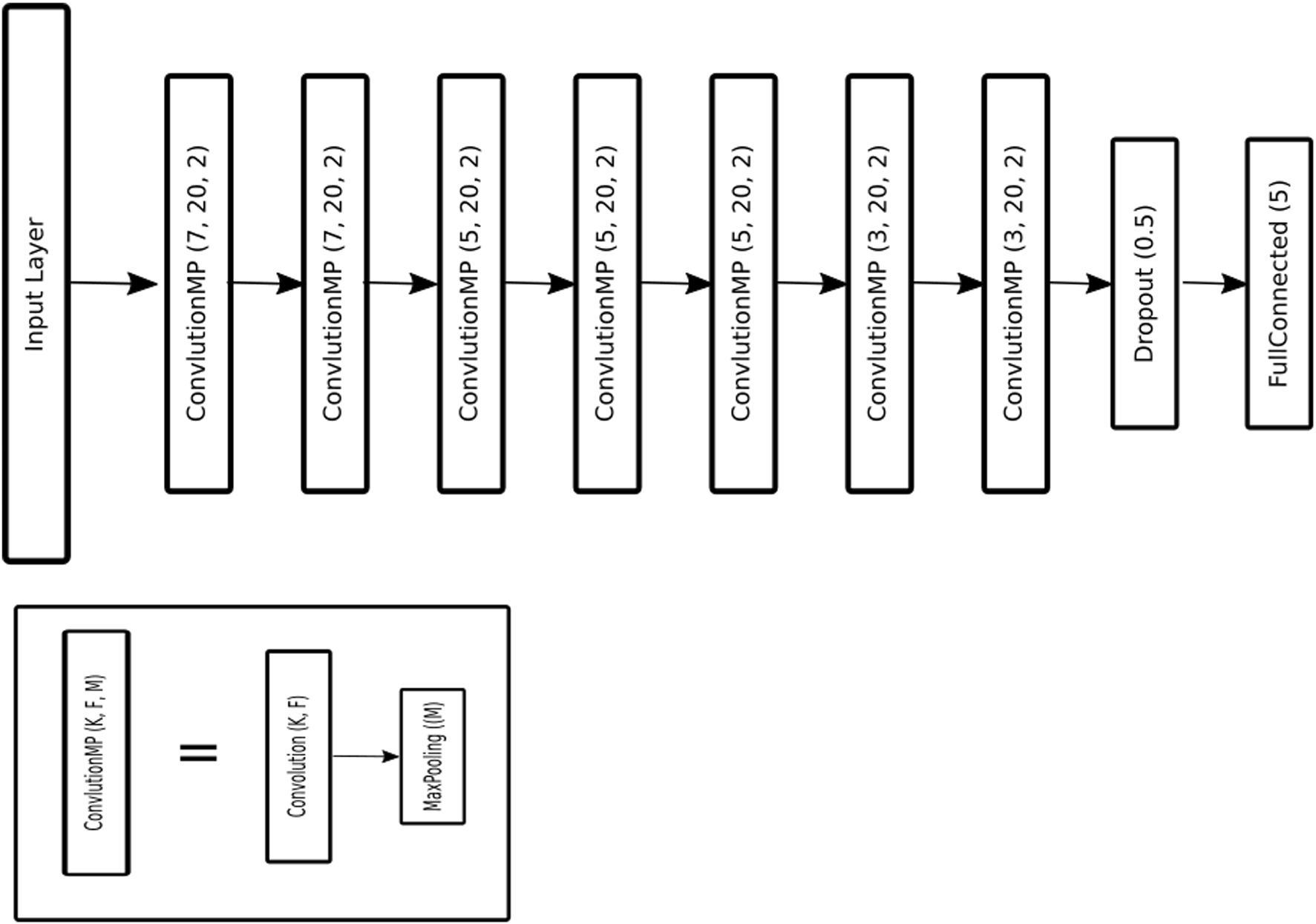

- class braindecode.models.DeepSleepNet(n_outputs=5, return_feats=False, n_chans=None, chs_info=None, n_times=None, input_window_seconds=None, sfreq=None, activation_large: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, activation_small: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ReLU'>, drop_prob: float = 0.5)[source]#

Bases:

EEGModuleMixin,ModuleSleep staging architecture from Supratak et al. (2017) [Supratak2017].

Convolutional neural network and bidirectional-Long Short-Term for single channels sleep staging described in [Supratak2017].

- Parameters:

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

return_feats (bool) – If True, return the features, i.e. the output of the feature extractor (before the final linear layer). If False, pass the features through the final linear layer.

n_chans (int) – Number of EEG channels.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.n_times (int) – Number of time samples of the input window.

input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

activation_large (nn.Module, default=nn.ELU) – Activation function class to apply. Should be a PyTorch activation module class like

nn.ReLUornn.ELU. Default isnn.ELU.activation_small (nn.Module, default=nn.ReLU) – Activation function class to apply. Should be a PyTorch activation module class like

nn.ReLUornn.ELU. Default isnn.ReLU.drop_prob (float, default=0.5) – The dropout rate for regularization. Values should be between 0 and 1.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

If some input signal-related parameters are not specified, there will be an attempt to infer them from the other parameters.

References

- forward(x)[source]#

Forward pass.

- Parameters:

x (torch.Tensor) – Batch of EEG windows of shape (batch_size, n_channels, n_times).

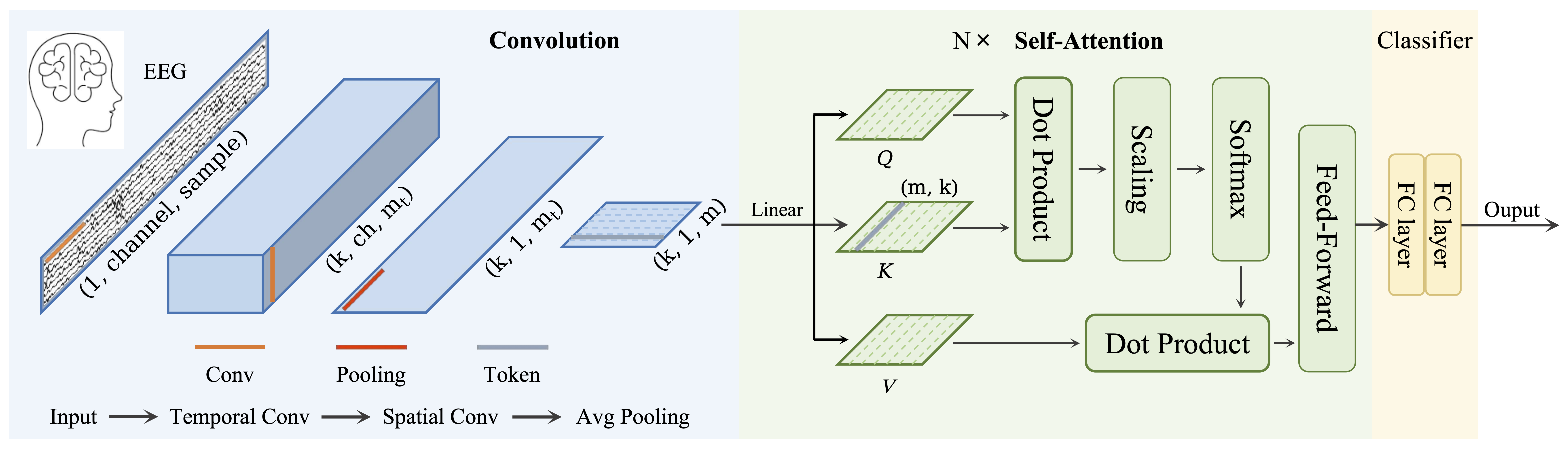

- class braindecode.models.EEGConformer(n_outputs=None, n_chans=None, n_filters_time=40, filter_time_length=25, pool_time_length=75, pool_time_stride=15, drop_prob=0.5, att_depth=6, att_heads=10, att_drop_prob=0.5, final_fc_length='auto', return_features=False, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, activation_transfor: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.GELU'>, n_times=None, chs_info=None, input_window_seconds=None, sfreq=None)[source]#

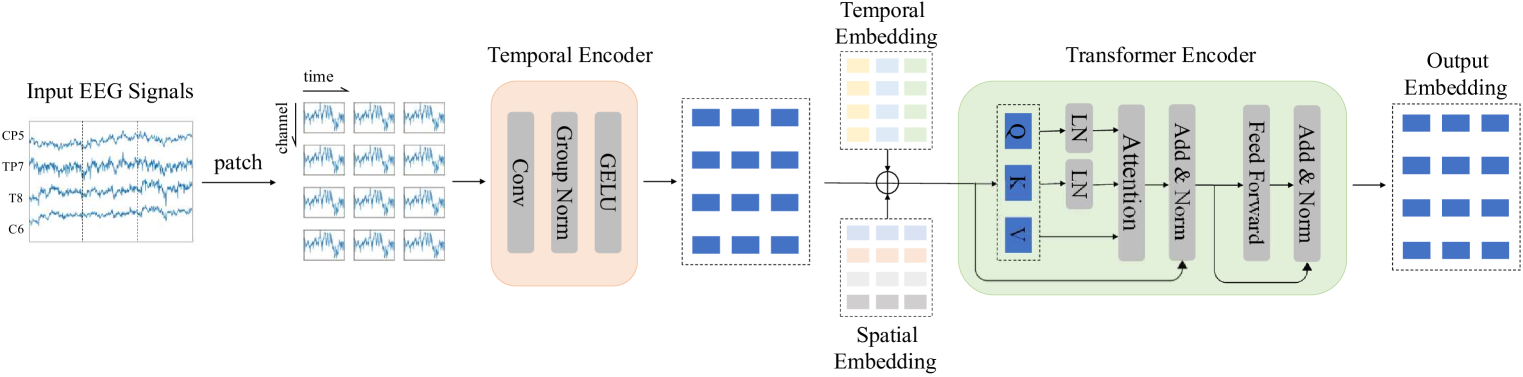

Bases:

EEGModuleMixin,ModuleEEG Conformer from Song et al. (2022) from [song2022].

Convolutional Transformer for EEG decoding.

The paper and original code with more details about the methodological choices are available at the [song2022] and [ConformerCode].

This neural network architecture receives a traditional braindecode input. The input shape should be three-dimensional matrix representing the EEG signals.

(batch_size, n_channels, n_timesteps).

- The EEG Conformer architecture is composed of three modules:

PatchEmbedding

TransformerEncoder

ClassificationHead

- Parameters:

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_chans (int) – Number of EEG channels.

n_filters_time (int) – Number of temporal filters, defines also embedding size.

filter_time_length (int) – Length of the temporal filter.

pool_time_length (int) – Length of temporal pooling filter.

pool_time_stride (int) – Length of stride between temporal pooling filters.

drop_prob (float) – Dropout rate of the convolutional layer.

att_depth (int) – Number of self-attention layers.

att_heads (int) – Number of attention heads.

att_drop_prob (float) – Dropout rate of the self-attention layer.

final_fc_length (int | str) – The dimension of the fully connected layer.

return_features (bool) – If True, the forward method returns the features before the last classification layer. Defaults to False.

activation (nn.Module) – Activation function as parameter. Default is nn.ELU

activation_transfor (nn.Module) – Activation function as parameter, applied at the FeedForwardBlock module inside the transformer. Default is nn.GeLU

n_times (int) – Number of time samples of the input window.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

The authors recommend using data augmentation before using Conformer, e.g. segmentation and recombination, Please refer to the original paper and code for more details.

The model was initially tuned on 4 seconds of 250 Hz data. Please adjust the scale of the temporal convolutional layer, and the pooling layer for better performance.

Added in version 0.8.

We aggregate the parameters based on the parts of the models, or when the parameters were used first, e.g. n_filters_time.

References

[song2022] (1,2)Song, Y., Zheng, Q., Liu, B. and Gao, X., 2022. EEG conformer: Convolutional transformer for EEG decoding and visualization. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 31, pp.710-719. https://ieeexplore.ieee.org/document/9991178

[ConformerCode]Song, Y., Zheng, Q., Liu, B. and Gao, X., 2022. EEG conformer: Convolutional transformer for EEG decoding and visualization. eeyhsong/EEG-Conformer.

- forward(x: Tensor) Tensor[source]#

Define the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the

Moduleinstance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.- Parameters:

x – The description is missing.

- class braindecode.models.EEGITNet(n_outputs=None, n_chans=None, n_times=None, chs_info=None, input_window_seconds=None, sfreq=None, n_filters_time: int = 2, kernel_length: int = 16, pool_kernel: int = 4, tcn_in_channel: int = 14, tcn_kernel_size: int = 4, tcn_padding: int = 3, drop_prob: float = 0.4, tcn_dilatation: int = 1, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>)[source]#

Bases:

EEGModuleMixin,SequentialEEG-ITNet from Salami, et al (2022) [Salami2022]

EEG-ITNet: An Explainable Inception Temporal Convolutional Network for motor imagery classification from Salami et al. 2022.

See [Salami2022] for details.

Code adapted from abbassalami/eeg-itnet

- Parameters:

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_chans (int) – Number of EEG channels.

n_times (int) – Number of time samples of the input window.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

n_filters_time – The description is missing.

kernel_length (int, optional) – Kernel length for inception branches. Determines the temporal receptive field. Default is 16.

pool_kernel (int, optional) – Pooling kernel size for the average pooling layer. Default is 4.

tcn_in_channel (int, optional) – Number of input channels for Temporal Convolutional (TC) blocks. Default is 14.

tcn_kernel_size (int, optional) – Kernel size for the TC blocks. Determines the temporal receptive field. Default is 4.

tcn_padding (int, optional) – Padding size for the TC blocks to maintain the input dimensions. Default is 3.

drop_prob (float, optional) – Dropout probability applied after certain layers to prevent overfitting. Default is 0.4.

tcn_dilatation (int, optional) – Dilation rate for the first TC block. Subsequent blocks will have dilation rates multiplied by powers of 2. Default is 1.

activation (nn.Module, default=nn.ELU) – Activation function class to apply. Should be a PyTorch activation module class like

nn.ReLUornn.ELU. Default isnn.ELU.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

This implementation is not guaranteed to be correct, has not been checked by original authors, only reimplemented from the paper based on author implementation.

References

- class braindecode.models.EEGInceptionERP(n_chans=None, n_outputs=None, n_times=1000, sfreq=128, drop_prob=0.5, scales_samples_s=(0.5, 0.25, 0.125), n_filters=8, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, batch_norm_alpha=0.01, depth_multiplier=2, pooling_sizes=(4, 2, 2, 2), chs_info=None, input_window_seconds=None)[source]#

Bases:

EEGModuleMixin,SequentialEEG Inception for ERP-based from Santamaria-Vazquez et al (2020) [santamaria2020].

The code for the paper and this model is also available at [santamaria2020] and an adaptation for PyTorch [2].

The model is strongly based on the original InceptionNet for an image. The main goal is to extract features in parallel with different scales. The authors extracted three scales proportional to the window sample size. The network had three parts: 1-larger inception block largest, 2-smaller inception block followed by 3-bottleneck for classification.

One advantage of the EEG-Inception block is that it allows a network to learn simultaneous components of low and high frequency associated with the signal. The winners of BEETL Competition/NeurIps 2021 used parts of the model [beetl].

The model is fully described in [santamaria2020].

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_times (int, optional) – Size of the input, in number of samples. Set to 128 (1s) as in [santamaria2020].

sfreq (float, optional) – EEG sampling frequency. Defaults to 128 as in [santamaria2020].

drop_prob (float, optional) – Dropout rate inside all the network. Defaults to 0.5 as in [santamaria2020].

scales_samples_s (list(float), optional) – Windows for inception block. Temporal scale (s) of the convolutions on each Inception module. This parameter determines the kernel sizes of the filters. Defaults to 0.5, 0.25, 0.125 seconds, as in [santamaria2020].

n_filters (int, optional) – Initial number of convolutional filters. Defaults to 8 as in [santamaria2020].

activation (nn.Module, optional) – Activation function. Defaults to ELU activation as in [santamaria2020].

batch_norm_alpha (float, optional) – Momentum for BatchNorm2d. Defaults to 0.01.

depth_multiplier (int, optional) – Depth multiplier for the depthwise convolution. Defaults to 2 as in [santamaria2020].

pooling_sizes (list(int), optional) – Pooling sizes for the inception blocks. Defaults to 4, 2, 2 and 2, as in [santamaria2020].

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.input_window_seconds (float) – Length of the input window in seconds.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

This implementation is not guaranteed to be correct, has not been checked by original authors, only reimplemented from the paper based on [2].

References

[santamaria2020] (1,2,3,4,5,6,7,8,9,10,11)Santamaria-Vazquez, E., Martinez-Cagigal, V., Vaquerizo-Villar, F., & Hornero, R. (2020). EEG-inception: A novel deep convolutional neural network for assistive ERP-based brain-computer interfaces. IEEE Transactions on Neural Systems and Rehabilitation Engineering , v. 28. Online: http://dx.doi.org/10.1109/TNSRE.2020.3048106

[beetl]Wei, X., Faisal, A.A., Grosse-Wentrup, M., Gramfort, A., Chevallier, S., Jayaram, V., Jeunet, C., Bakas, S., Ludwig, S., Barmpas, K., Bahri, M., Panagakis, Y., Laskaris, N., Adamos, D.A., Zafeiriou, S., Duong, W.C., Gordon, S.M., Lawhern, V.J., Śliwowski, M., Rouanne, V. & Tempczyk, P. (2022). 2021 BEETL Competition: Advancing Transfer Learning for Subject Independence & Heterogeneous EEG Data Sets. Proceedings of the NeurIPS 2021 Competitions and Demonstrations Track, in Proceedings of Machine Learning Research 176:205-219 Available from https://proceedings.mlr.press/v176/wei22a.html.

- class braindecode.models.EEGInceptionMI(n_chans=None, n_outputs=None, input_window_seconds=None, sfreq=250, n_convs: int = 5, n_filters: int = 48, kernel_unit_s: float = 0.1, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ReLU'>, chs_info=None, n_times=None)[source]#

Bases:

EEGModuleMixin,ModuleEEG Inception for Motor Imagery, as proposed in Zhang et al. (2021) [1]

The model is strongly based on the original InceptionNet for computer vision. The main goal is to extract features in parallel with different scales. The network has two blocks made of 3 inception modules with a skip connection.

The model is fully described in [1].

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

input_window_seconds (float, optional) – Size of the input, in seconds. Set to 4.5 s as in [1] for dataset BCI IV 2a.

sfreq (float, optional) – EEG sampling frequency in Hz. Defaults to 250 Hz as in [1] for dataset BCI IV 2a.

n_convs (int, optional) – Number of convolution per inception wide branching. Defaults to 5 as in [1] for dataset BCI IV 2a.

n_filters (int, optional) – Number of convolutional filters for all layers of this type. Set to 48 as in [1] for dataset BCI IV 2a.

kernel_unit_s (float, optional) – Size in seconds of the basic 1D convolutional kernel used in inception modules. Each convolutional layer in such modules have kernels of increasing size, odd multiples of this value (e.g. 0.1, 0.3, 0.5, 0.7, 0.9 here for

n_convs=5). Defaults to 0.1 s.activation (nn.Module) – Activation function. Defaults to ReLU activation.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.n_times (int) – Number of time samples of the input window.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

This implementation is not guaranteed to be correct, has not been checked by original authors, only reimplemented bosed on the paper [1].

References

- forward(X: Tensor) Tensor[source]#

Define the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the

Moduleinstance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.- Parameters:

X – The description is missing.

- class braindecode.models.EEGMiner(method: str = 'plv', n_chans=None, n_outputs=None, n_times=None, chs_info=None, input_window_seconds=None, sfreq=None, filter_f_mean=(23.0, 23.0), filter_bandwidth=(44.0, 44.0), filter_shape=(2.0, 2.0), group_delay=(20.0, 20.0), clamp_f_mean=(1.0, 45.0))[source]#

Bases:

EEGModuleMixin,ModuleEEGMiner from Ludwig et al (2024) [eegminer].

EEGMiner is a neural network model for EEG signal classification using learnable generalized Gaussian filters. The model leverages frequency domain filtering and connectivity metrics or feature extraction, such as Phase Locking Value (PLV) to extract meaningful features from EEG data, enabling effective classification tasks.

The model has the following steps:

Generalized Gaussian filters in the frequency domain to the input EEG signals.

- Connectivity estimators (corr, plv) or Electrode-Wise Band Power (mag), by default (plv).

‘corr’: Computes the correlation of the filtered signals.

‘plv’: Computes the phase locking value of the filtered signals.

‘mag’: Computes the magnitude of the filtered signals.

- Feature Normalization

Apply batch normalization.

- Final Layer

Feeds the batch-normalized features into a final linear layer for classification.

Depending on the selected method (mag, corr, or plv), it computes the filtered signals’ magnitude, correlation, or phase locking value. These features are then normalized and passed through a batch normalization layer before being fed into a final linear layer for classification.

The input to EEGMiner should be a three-dimensional tensor representing EEG signals:

(batch_size, n_channels, n_timesteps).- Parameters:

method (str, default="plv") – The method used for feature extraction. Options are: - “mag”: Electrode-Wise band power of the filtered signals. - “corr”: Correlation between filtered channels. - “plv”: Phase Locking Value connectivity metric.

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_times (int) – Number of time samples of the input window.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

filter_f_mean (list of float, default=[23.0, 23.0]) – Mean frequencies for the generalized Gaussian filters.

filter_bandwidth (list of float, default=[44.0, 44.0]) – Bandwidths for the generalized Gaussian filters.

filter_shape (list of float, default=[2.0, 2.0]) – Shape parameters for the generalized Gaussian filters.

group_delay (tuple of float, default=(20.0, 20.0)) – Group delay values for the filters in milliseconds.

clamp_f_mean (tuple of float, default=(1.0, 45.0)) – Clamping range for the mean frequency parameters.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

EEGMiner incorporates learnable parameters for filter characteristics, allowing the model to adaptively learn optimal frequency bands and phase delays for the classification task. By default, using the PLV as a connectivity metric makes EEGMiner suitable for tasks requiring the analysis of phase relationships between different EEG channels.

The model and the module have patent [eegminercode], and the code is CC BY-NC 4.0.

Added in version 0.9.

References

[eegminer]Ludwig, S., Bakas, S., Adamos, D. A., Laskaris, N., Panagakis, Y., & Zafeiriou, S. (2024). EEGMiner: discovering interpretable features of brain activity with learnable filters. Journal of Neural Engineering, 21(3), 036010.

[eegminercode]Ludwig, S., Bakas, S., Adamos, D. A., Laskaris, N., Panagakis, Y., & Zafeiriou, S. (2024). EEGMiner: discovering interpretable features of brain activity with learnable filters. SMLudwig/EEGminer. Cogitat, Ltd. “Learnable filters for EEG classification.” Patent GB2609265. https://www.ipo.gov.uk/p-ipsum/Case/ApplicationNumber/GB2113420.0

- class braindecode.models.EEGModuleMixin(n_outputs: int | None = None, n_chans: int | None = None, chs_info=None, n_times: int | None = None, input_window_seconds: float | None = None, sfreq: float | None = None)[source]#

Bases:

objectMixin class for all EEG models in braindecode.

- Parameters:

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_chans (int) – Number of EEG channels.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.n_times (int) – Number of time samples of the input window.

input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

If some input signal-related parameters are not specified, there will be an attempt to infer them from the other parameters.

- get_output_shape() tuple[int, ...][source]#

Returns shape of neural network output for batch size equal 1.

- get_torchinfo_statistics(col_names: Iterable[str] | None = ('input_size', 'output_size', 'num_params', 'kernel_size'), row_settings: Iterable[str] | None = ('var_names', 'depth')) ModelStatistics[source]#

Generate table describing the model using torchinfo.summary.

- Parameters:

col_names (tuple, optional) – Specify which columns to show in the output, see torchinfo for details, by default (“input_size”, “output_size”, “num_params”, “kernel_size”)

row_settings (tuple, optional) – Specify which features to show in a row, see torchinfo for details, by default (“var_names”, “depth”)

- Returns:

ModelStatistics generated by torchinfo.summary.

- Return type:

torchinfo.ModelStatistics

- to_dense_prediction_model(axis: tuple[int, ...] | int = (2, 3)) None[source]#

Transform a sequential model with strides to a model that outputs dense predictions by removing the strides and instead inserting dilations. Modifies model in-place.

- Parameters:

axis (int or (int,int)) – Axis to transform (in terms of intermediate output axes) can either be 2, 3, or (2,3).

Notes

Does not yet work correctly for average pooling. Prior to version 0.1.7, there had been a bug that could move strides backwards one layer.

- class braindecode.models.EEGNeX(n_chans=None, n_outputs=None, n_times=None, chs_info=None, input_window_seconds=None, sfreq=None, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, depth_multiplier: int = 2, filter_1: int = 8, filter_2: int = 32, drop_prob: float = 0.5, kernel_block_1_2: int = 64, kernel_block_4: int = 16, dilation_block_4: int = 2, avg_pool_block4: int = 4, kernel_block_5: int = 16, dilation_block_5: int = 4, avg_pool_block5: int = 8, max_norm_conv: float = 1.0, max_norm_linear: float = 0.25)[source]#

Bases:

EEGModuleMixin,ModuleEEGNeX model from Chen et al. (2024) [eegnex].

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_times (int) – Number of time samples of the input window.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

activation (nn.Module, optional) – Activation function to use. Default is nn.ELU.

depth_multiplier (int, optional) – Depth multiplier for the depthwise convolution. Default is 2.

filter_1 (int, optional) – Number of filters in the first convolutional layer. Default is 8.

filter_2 (int, optional) – Number of filters in the second convolutional layer. Default is 32.

drop_prob (float, optional) – Dropout rate. Default is 0.5.

kernel_block_1_2 – The description is missing.

kernel_block_4 (tuple[int, int], optional) – Kernel size for block 4. Default is (1, 16).

dilation_block_4 (tuple[int, int], optional) – Dilation rate for block 4. Default is (1, 2).

avg_pool_block4 (tuple[int, int], optional) – Pooling size for block 4. Default is (1, 4).

kernel_block_5 (tuple[int, int], optional) – Kernel size for block 5. Default is (1, 16).

dilation_block_5 (tuple[int, int], optional) – Dilation rate for block 5. Default is (1, 4).

avg_pool_block5 (tuple[int, int], optional) – Pooling size for block 5. Default is (1, 8).

max_norm_conv – The description is missing.

max_norm_linear – The description is missing.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

This implementation is not guaranteed to be correct, has not been checked by original authors, only reimplemented from the paper description and source code in tensorflow [EEGNexCode].

References

[eegnex]Chen, X., Teng, X., Chen, H., Pan, Y., & Geyer, P. (2024). Toward reliable signals decoding for electroencephalogram: A benchmark study to EEGNeX. Biomedical Signal Processing and Control, 87, 105475.

[EEGNexCode]Chen, X., Teng, X., Chen, H., Pan, Y., & Geyer, P. (2024). Toward reliable signals decoding for electroencephalogram: A benchmark study to EEGNeX. chenxiachan/EEGNeX

- forward(x: Tensor) Tensor[source]#

Forward pass of the EEGNeX model.

- Parameters:

x (torch.Tensor) – Input tensor of shape (batch_size, n_chans, n_times).

- Returns:

Output tensor of shape (batch_size, n_outputs).

- Return type:

- class braindecode.models.EEGNetv1(n_chans=None, n_outputs=None, n_times=None, final_conv_length='auto', pool_mode='max', second_kernel_size=(2, 32), third_kernel_size=(8, 4), drop_prob=0.25, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, chs_info=None, input_window_seconds=None, sfreq=None)[source]#

Bases:

EEGModuleMixin,SequentialEEGNet model from Lawhern et al. 2016 from [EEGNet].

See details in [EEGNet].

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_times (int) – Number of time samples of the input window.

final_conv_length – The description is missing.

pool_mode – The description is missing.

second_kernel_size – The description is missing.

third_kernel_size – The description is missing.

drop_prob – The description is missing.

activation (nn.Module, default=nn.ELU) – Activation function class to apply. Should be a PyTorch activation module class like

nn.ReLUornn.ELU. Default isnn.ELU.chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

This implementation is not guaranteed to be correct, has not been checked by original authors, only reimplemented from the paper description.

References

- class braindecode.models.EEGNetv4(n_chans: int | None = None, n_outputs: int | None = None, n_times: int | None = None, final_conv_length: str | int = 'auto', pool_mode: str = 'mean', F1: int = 8, D: int = 2, F2: int | None = None, kernel_length: int = 64, *, depthwise_kernel_length: int = 16, pool1_kernel_size: int = 4, pool2_kernel_size: int = 8, conv_spatial_max_norm: int = 1, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, batch_norm_momentum: float = 0.01, batch_norm_affine: bool = True, batch_norm_eps: float = 0.001, drop_prob: float = 0.25, final_layer_with_constraint: bool = False, norm_rate: float = 0.25, chs_info: list[~typing.Dict] | None = None, input_window_seconds=None, sfreq=None, **kwargs)[source]#

Bases:

EEGModuleMixin,SequentialEEGNet v4 model from Lawhern et al. (2018) [EEGNet4].

See details in [EEGNet4].

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_times (int) – Number of time samples of the input window.

final_conv_length (int or "auto", default="auto") – Length of the final convolution layer. If “auto”, it is set based on n_times.

pool_mode ({"mean", "max"}, default="mean") – Pooling method to use in pooling layers.

F1 (int, default=8) – Number of temporal filters in the first convolutional layer.

D (int, default=2) – Depth multiplier for the depthwise convolution.

F2 (int or None, default=None) – Number of pointwise filters in the separable convolution. Usually set to

F1 * D.kernel_length (int, default=64) – Length of the temporal convolution kernel.

depthwise_kernel_length (int, default=16) – Length of the depthwise convolution kernel in the separable convolution.

pool1_kernel_size (int, default=4) – Kernel size of the first pooling layer.

pool2_kernel_size (int, default=8) – Kernel size of the second pooling layer.

conv_spatial_max_norm (float, default=1) – Maximum norm constraint for the spatial (depthwise) convolution.

activation (nn.Module, default=nn.ELU) – Non-linear activation function to be used in the layers.

batch_norm_momentum (float, default=0.01) – Momentum for instance normalization in batch norm layers.

batch_norm_affine (bool, default=True) – If True, batch norm has learnable affine parameters.

batch_norm_eps (float, default=1e-3) – Epsilon for numeric stability in batch norm layers.

drop_prob (float, default=0.25) – Dropout probability.

final_layer_with_constraint (bool, default=False) – If

False, uses a convolution-based classification layer. IfTrue, apply a flattened linear layer with constraint on the weights norm as the final classification step.norm_rate (float, default=0.25) – Max-norm constraint value for the linear layer (used if

final_layer_conv=False).chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

**kwargs – The description is missing.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

If some input signal-related parameters are not specified, there will be an attempt to infer them from the other parameters.

References

- class braindecode.models.EEGResNet(n_chans=None, n_outputs=None, n_times=None, final_pool_length='auto', n_first_filters=20, n_layers_per_block=2, first_filter_length=3, activation=<class 'torch.nn.modules.activation.ELU'>, split_first_layer=True, batch_norm_alpha=0.1, batch_norm_epsilon=0.0001, conv_weight_init_fn=<function EEGResNet.<lambda>>, chs_info=None, input_window_seconds=None, sfreq=250)[source]#

Bases:

EEGModuleMixin,SequentialEEGResNet from Schirrmeister et al. 2017 [Schirrmeister2017].

Model described in [Schirrmeister2017].

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_times (int) – Number of time samples of the input window.

final_pool_length – The description is missing.

n_first_filters – The description is missing.

n_layers_per_block – The description is missing.

first_filter_length – The description is missing.

activation (nn.Module, default=nn.ELU) – Activation function class to apply. Should be a PyTorch activation module class like

nn.ReLUornn.ELU. Default isnn.ELU.split_first_layer – The description is missing.

batch_norm_alpha – The description is missing.

batch_norm_epsilon – The description is missing.

conv_weight_init_fn – The description is missing.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

If some input signal-related parameters are not specified, there will be an attempt to infer them from the other parameters.

References

[Schirrmeister2017] (1,2)Schirrmeister, R. T., Springenberg, J. T., Fiederer, L. D. J., Glasstetter, M., Eggensperger, K., Tangermann, M., Hutter, F. & Ball, T. (2017). Deep learning with convolutional neural networks for , EEG decoding and visualization. Human Brain Mapping, Aug. 2017. Online: http://dx.doi.org/10.1002/hbm.23730

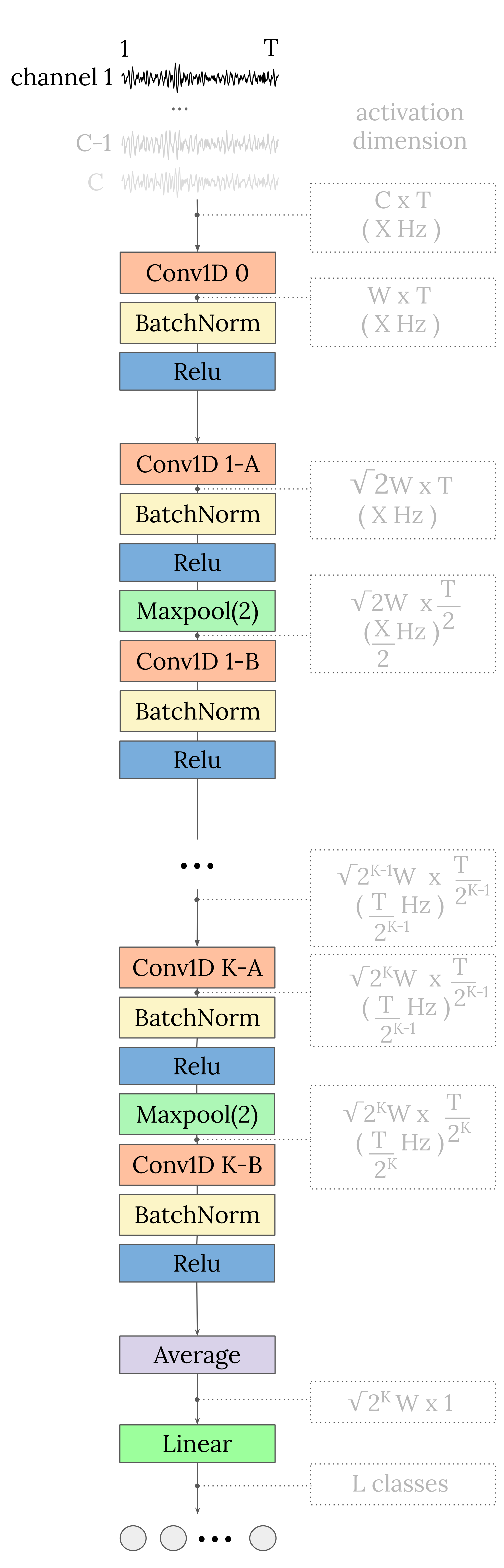

- class braindecode.models.EEGSimpleConv(n_outputs=None, n_chans=None, sfreq=None, feature_maps=128, n_convs=2, resampling_freq=80, kernel_size=8, return_feature=False, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ReLU'>, chs_info=None, n_times=None, input_window_seconds=None)[source]#

Bases:

EEGModuleMixin,ModuleEEGSimpleConv from Ouahidi, YE et al. (2023) [Yassine2023].

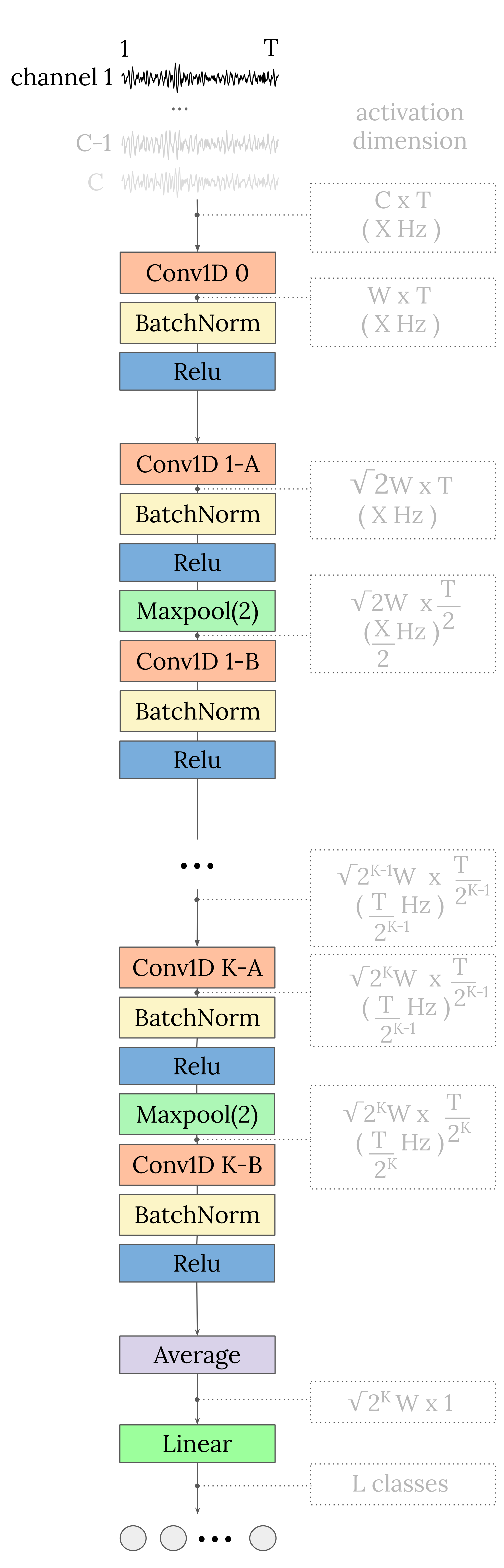

EEGSimpleConv is a 1D Convolutional Neural Network originally designed for decoding motor imagery from EEG signals. The model aims to have a very simple and straightforward architecture that allows a low latency, while still achieving very competitive performance.

EEG-SimpleConv starts with a 1D convolutional layer, where each EEG channel enters a separate 1D convolutional channel. This is followed by a series of blocks of two 1D convolutional layers. Between the two convolutional layers of each block is a max pooling layer, which downsamples the data by a factor of 2. Each convolution is followed by a batch normalisation layer and a ReLU activation function. Finally, a global average pooling (in the time domain) is performed to obtain a single value per feature map, which is then fed into a linear layer to obtain the final classification prediction output.

The paper and original code with more details about the methodological choices are available at the [Yassine2023] and [Yassine2023Code].

The input shape should be three-dimensional matrix representing the EEG signals.

(batch_size, n_channels, n_timesteps).- Parameters:

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_chans (int) – Number of EEG channels.

sfreq (float) – Sampling frequency of the EEG recordings.

feature_maps (int) – Number of Feature Maps at the first Convolution, width of the model.

n_convs (int) – Number of blocks of convolutions (2 convolutions per block), depth of the model.

resampling_freq – The description is missing.

kernel_size (int) – Size of the convolutions kernels.

return_feature – The description is missing.

activation (nn.Module, default=nn.ELU) – Activation function class to apply. Should be a PyTorch activation module class like

nn.ReLUornn.ELU. Default isnn.ELU.chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.n_times (int) – Number of time samples of the input window.

input_window_seconds (float) – Length of the input window in seconds.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

The authors recommend using the default parameters for MI decoding. Please refer to the original paper and code for more details.

Recommended range for the choice of the hyperparameters, regarding the evaluation paradigm.

Parameter | Within-Subject | Cross-Subject |feature_maps | [64-144] | [64-144] |n_convs | 1 | [2-4] |resampling_freq | [70-100] | [50-80] |kernel_size | [12-17] | [5-8] |An intensive ablation study is included in the paper to understand the of each parameter on the model performance.

Added in version 0.9.

References

[Yassine2023] (1,2)Yassine El Ouahidi, V. Gripon, B. Pasdeloup, G. Bouallegue N. Farrugia, G. Lioi, 2023. A Strong and Simple Deep Learning Baseline for BCI Motor Imagery Decoding. Arxiv preprint. arxiv.org/abs/2309.07159

[Yassine2023Code]Yassine El Ouahidi, V. Gripon, B. Pasdeloup, G. Bouallegue N. Farrugia, G. Lioi, 2023. A Strong and Simple Deep Learning Baseline for BCI Motor Imagery Decoding. GitHub repository. elouayas/EEGSimpleConv.

- class braindecode.models.EEGTCNet(n_chans=None, n_outputs=None, n_times=None, chs_info=None, input_window_seconds=None, sfreq=None, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, depth_multiplier: int = 2, filter_1: int = 8, kern_length: int = 64, drop_prob: float = 0.5, depth: int = 2, kernel_size: int = 4, filters: int = 12, max_norm_const: float = 0.25)[source]#

Bases:

EEGModuleMixin,ModuleEEGTCNet model from Ingolfsson et al. (2020) [ingolfsson2020].

Combining EEGNet and TCN blocks.

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

n_times (int) – Number of time samples of the input window.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

activation (nn.Module, optional) – Activation function to use. Default is nn.ELU().

depth_multiplier (int, optional) – Depth multiplier for the depthwise convolution. Default is 2.

filter_1 (int, optional) – Number of temporal filters in the first convolutional layer. Default is 8.

kern_length (int, optional) – Length of the temporal kernel in the first convolutional layer. Default is 64.

drop_prob – The description is missing.

depth (int, optional) – Number of residual blocks in the TCN. Default is 2.

kernel_size (int, optional) – Size of the temporal convolutional kernel in the TCN. Default is 4.

filters (int, optional) – Number of filters in the TCN convolutional layers. Default is 12.

max_norm_const (float) – Maximum L2-norm constraint imposed on weights of the last fully-connected layer. Defaults to 0.25.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

If some input signal-related parameters are not specified, there will be an attempt to infer them from the other parameters.

References

[ingolfsson2020]Ingolfsson, T. M., Hersche, M., Wang, X., Kobayashi, N., Cavigelli, L., & Benini, L. (2020). EEG-TCNet: An accurate temporal convolutional network for embedded motor-imagery brain–machine interfaces. https://doi.org/10.48550/arXiv.2006.00622

- forward(x: Tensor) Tensor[source]#

Forward pass of the EEGTCNet model.

- Parameters:

x (torch.Tensor) – Input tensor of shape (batch_size, n_chans, n_times).

- Returns:

Output tensor of shape (batch_size, n_outputs).

- Return type:

- class braindecode.models.FBCNet(n_chans=None, n_outputs=None, chs_info=None, n_times=None, input_window_seconds=None, sfreq=None, n_bands=9, n_filters_spat: int = 32, temporal_layer: str = 'LogVarLayer', n_dim: int = 3, stride_factor: int = 4, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.SiLU'>, linear_max_norm: float = 0.5, cnn_max_norm: float = 2.0, filter_parameters: dict[~typing.Any, ~typing.Any] | None = None)[source]#

Bases:

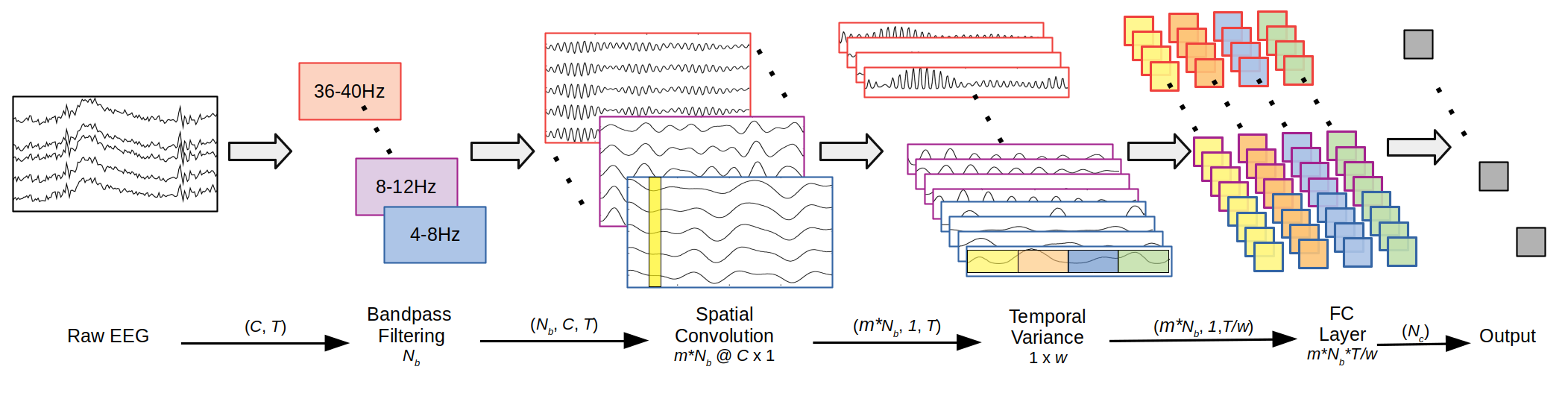

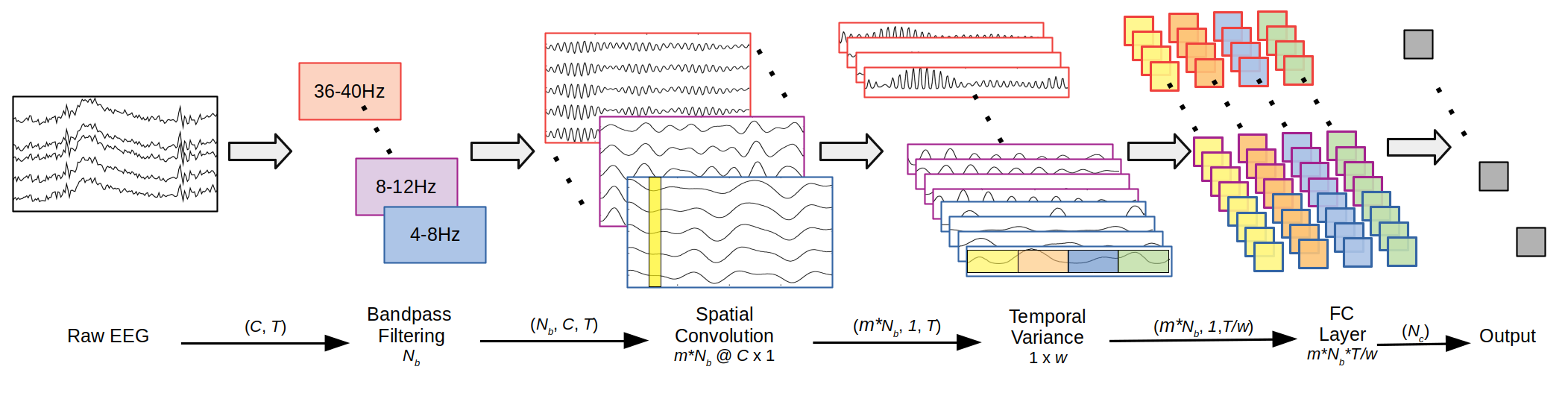

EEGModuleMixin,ModuleFBCNet from Mane, R et al (2021) [fbcnet2021].

The FBCNet model applies spatial convolution and variance calculation along the time axis, inspired by the Filter Bank Common Spatial Pattern (FBCSP) algorithm.

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.n_times (int) – Number of time samples of the input window.

input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

n_bands (int or None or list[tuple[int, int]]], default=9) – Number of frequency bands. Could

n_filters_spat (int, default=32) – Number of spatial filters for the first convolution.

temporal_layer (str, default='LogVarLayer') – Type of temporal aggregator layer. Options: ‘VarLayer’, ‘StdLayer’, ‘LogVarLayer’, ‘MeanLayer’, ‘MaxLayer’.

n_dim (int, default=3) – Number of dimensions for the temporal reductor

stride_factor (int, default=4) – Stride factor for reshaping.

activation (nn.Module, default=nn.SiLU) – Activation function class to apply in Spatial Convolution Block.

linear_max_norm (float, default=0.5) – Maximum norm for the final linear layer.

cnn_max_norm (float, default=2.0) – Maximum norm for the spatial convolution layer.

filter_parameters (dict, default None) – Parameters for the FilterBankLayer

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

This implementation is not guaranteed to be correct and has not been checked by the original authors; it has only been reimplemented from the paper description and source code [fbcnetcode2021]. There is a difference in the activation function; in the paper, the ELU is used as the activation function, but in the original code, SiLU is used. We followed the code.

References

[fbcnet2021]Mane, R., Chew, E., Chua, K., Ang, K. K., Robinson, N., Vinod, A. P., … & Guan, C. (2021). FBCNet: A multi-view convolutional neural network for brain-computer interface. preprint arXiv:2104.01233.

[fbcnetcode2021]Link to source-code: ravikiran-mane/FBCNet

- forward(x: Tensor) Tensor[source]#

Forward pass of the FBCNet model.

- Parameters:

x (torch.Tensor) – Input tensor with shape (batch_size, n_chans, n_times).

- Returns:

Output tensor with shape (batch_size, n_outputs).

- Return type:

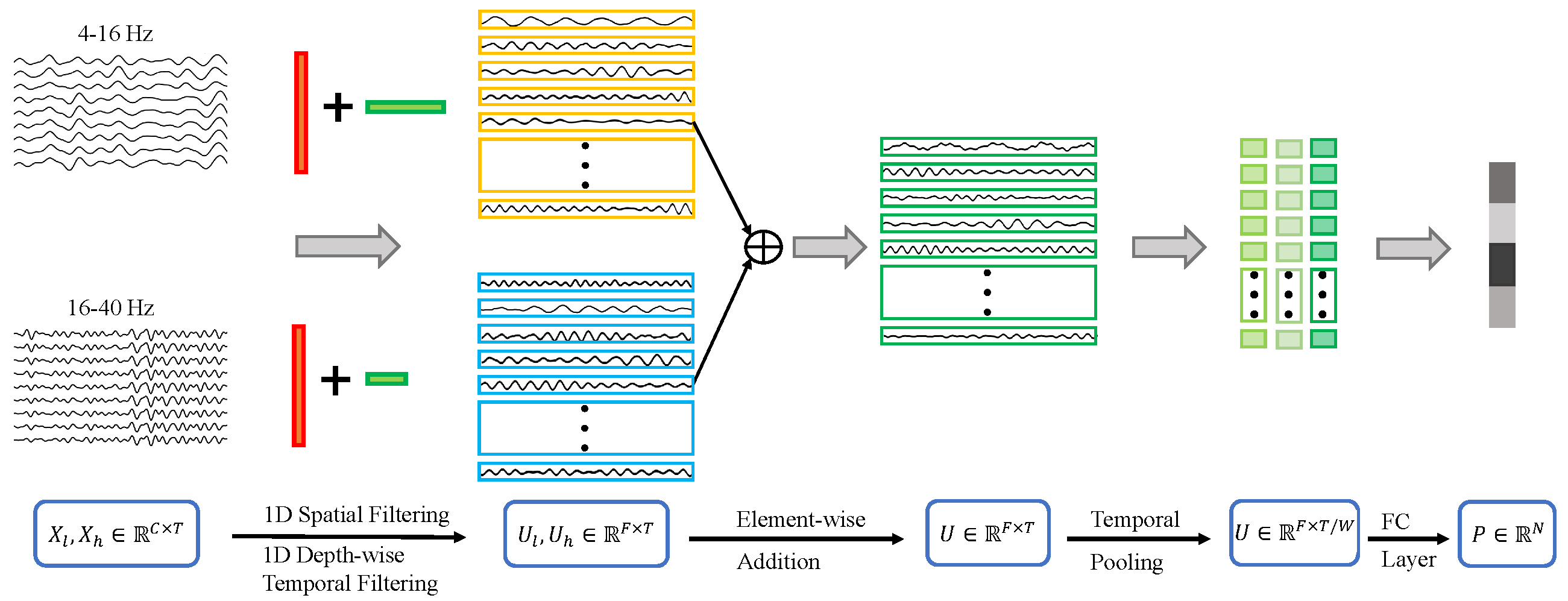

- class braindecode.models.FBLightConvNet(n_chans=None, n_outputs=None, chs_info=None, n_times=None, input_window_seconds=None, sfreq=None, n_bands=9, n_filters_spat: int = 32, n_dim: int = 3, stride_factor: int = 4, win_len: int = 250, heads: int = 8, weight_softmax: bool = True, bias: bool = False, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ELU'>, verbose: bool = False, filter_parameters: dict | None = None)[source]#

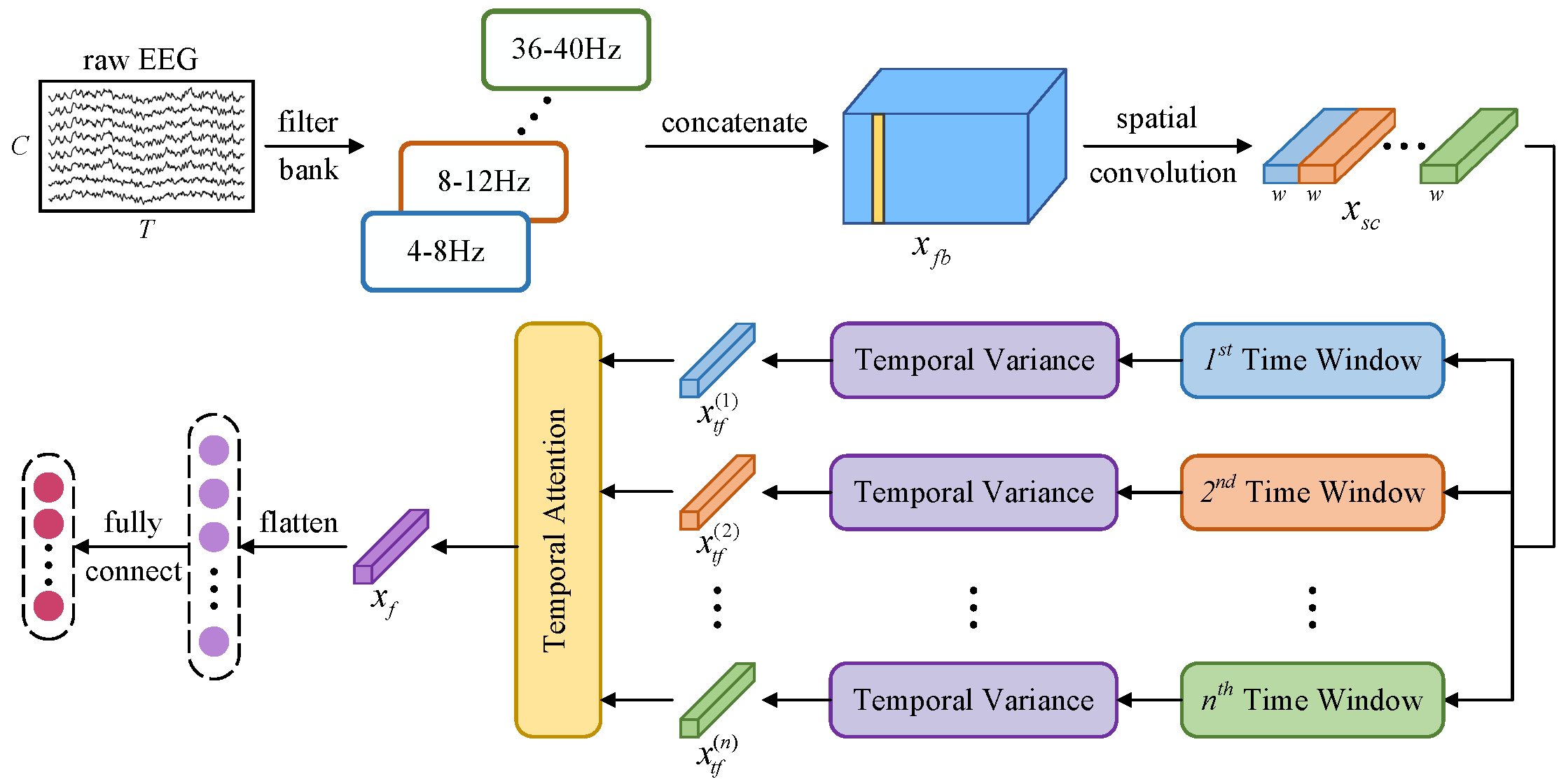

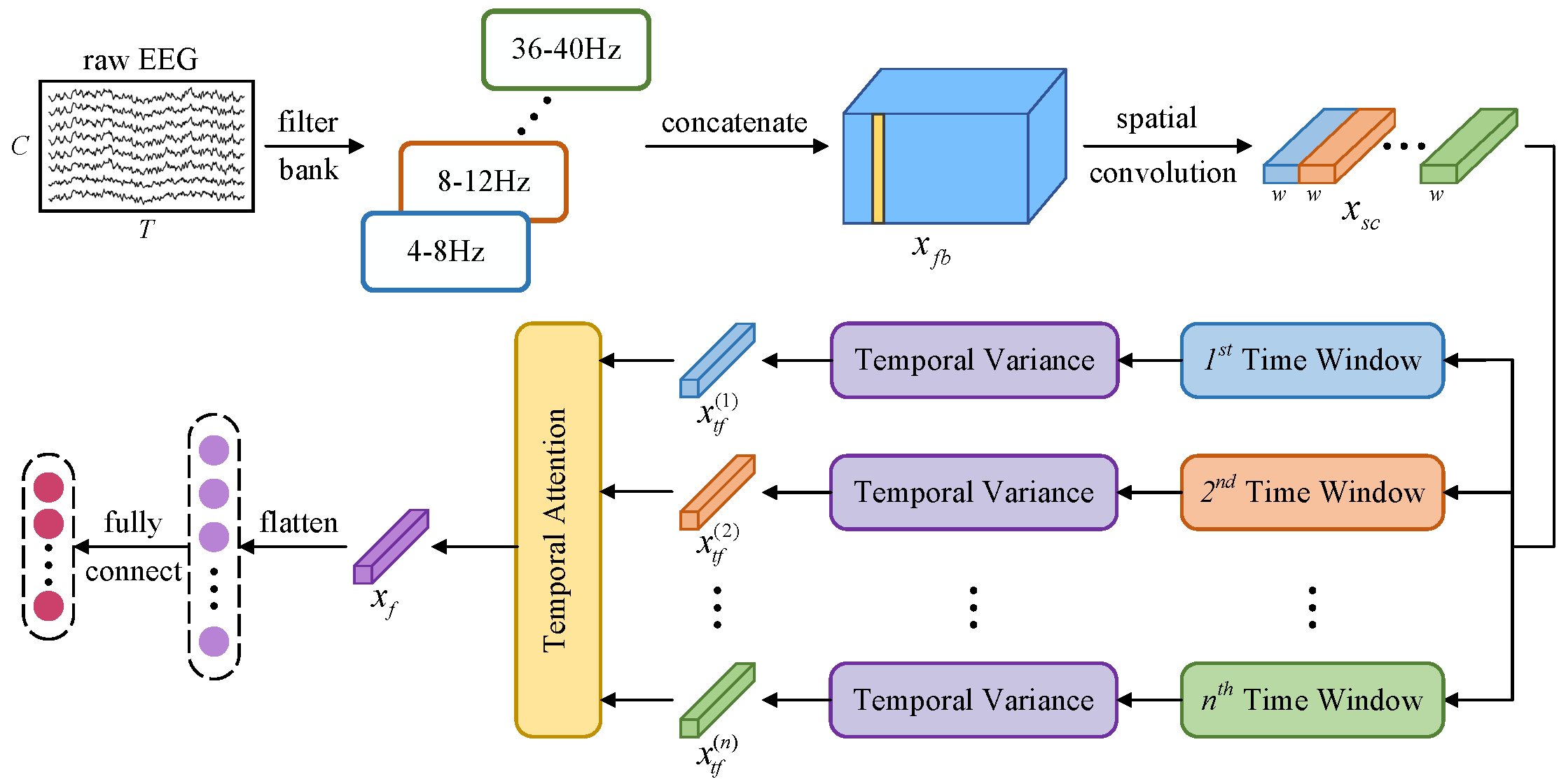

Bases:

EEGModuleMixin,ModuleLightConvNet from Ma, X et al (2023) [lightconvnet].

A lightweight convolutional neural network incorporating temporal dependency learning and attention mechanisms. The architecture is designed to efficiently capture spatial and temporal features through specialized convolutional layers and multi-head attention.

The network architecture consists of four main modules:

- Spatial and Spectral Information Learning:

Applies filterbank and spatial convolutions. This module is followed by batch normalization and an activation function to enhance feature representation.

- Temporal Segmentation and Feature Extraction:

Divides the processed data into non-overlapping temporal windows. Within each window, a variance-based layer extracts discriminative features, which are then log-transformed to stabilize variance before being passed to the attention module.

- Temporal Attention Module: Utilizes a multi-head attention

mechanism with depthwise separable convolutions to capture dependencies across different temporal segments. The attention weights are normalized using softmax and aggregated to form a comprehensive temporal representation.

- Final Layer: Flattens the aggregated features and passes them

through a linear layer to with kernel sizes matching the input dimensions to integrate features across different channels generate the final output predictions.

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.n_times (int) – Number of time samples of the input window.

input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

n_bands (int or None or list of tuple of int, default=8) – Number of frequency bands or a list of frequency band tuples. If a list of tuples is provided, each tuple defines the lower and upper bounds of a frequency band.

n_filters_spat (int, default=32) – Number of spatial filters in the depthwise convolutional layer.

n_dim (int, default=3) – Number of dimensions for the temporal reduction layer.

stride_factor (int, default=4) – Stride factor used for reshaping the temporal dimension.

win_len – The description is missing.

heads (int, default=8) – Number of attention heads in the multi-head attention mechanism.

weight_softmax (bool, default=True) – If True, applies softmax to the attention weights.

bias (bool, default=False) – If True, includes a bias term in the convolutional layers.

activation (nn.Module, default=nn.ELU) – Activation function class to apply after convolutional layers.

verbose (bool, default=False) – If True, enables verbose output during filter creation using mne.

filter_parameters (dict, default={}) – Additional parameters for the FilterBankLayer.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

This implementation is not guaranteed to be correct and has not been checked by the original authors; it is a braindecode adaptation from the Pytorch source-code [lightconvnetcode].

References

[lightconvnet]Ma, X., Chen, W., Pei, Z., Liu, J., Huang, B., & Chen, J. (2023). A temporal dependency learning CNN with attention mechanism for MI-EEG decoding. IEEE Transactions on Neural Systems and Rehabilitation Engineering.

[lightconvnetcode]Link to source-code: Ma-Xinzhi/LightConvNet

- forward(x: Tensor) Tensor[source]#

Forward pass of the FBLightConvNet model.

- Parameters:

x (torch.Tensor) – Input tensor with shape (batch_size, n_chans, n_times).

- Returns:

Output tensor with shape (batch_size, n_outputs).

- Return type:

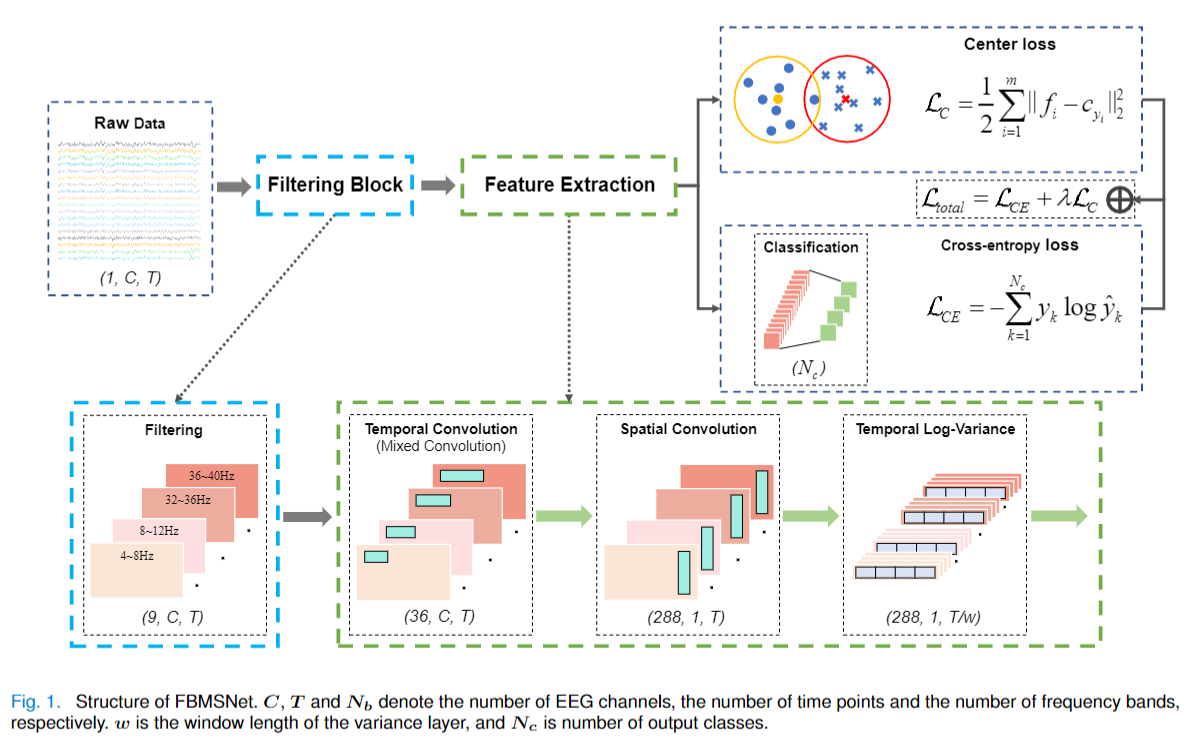

- class braindecode.models.FBMSNet(n_chans=None, n_outputs=None, chs_info=None, n_times=None, input_window_seconds=None, sfreq=None, n_bands: int = 9, n_filters_spat: int = 36, temporal_layer: str = 'LogVarLayer', n_dim: int = 3, stride_factor: int = 4, dilatability: int = 8, activation: ~torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.SiLU'>, kernels_weights: ~typing.Sequence[int] = (15, 31, 63, 125), cnn_max_norm: float = 2, linear_max_norm: float = 0.5, verbose: bool = False, filter_parameters: dict | None = None)[source]#

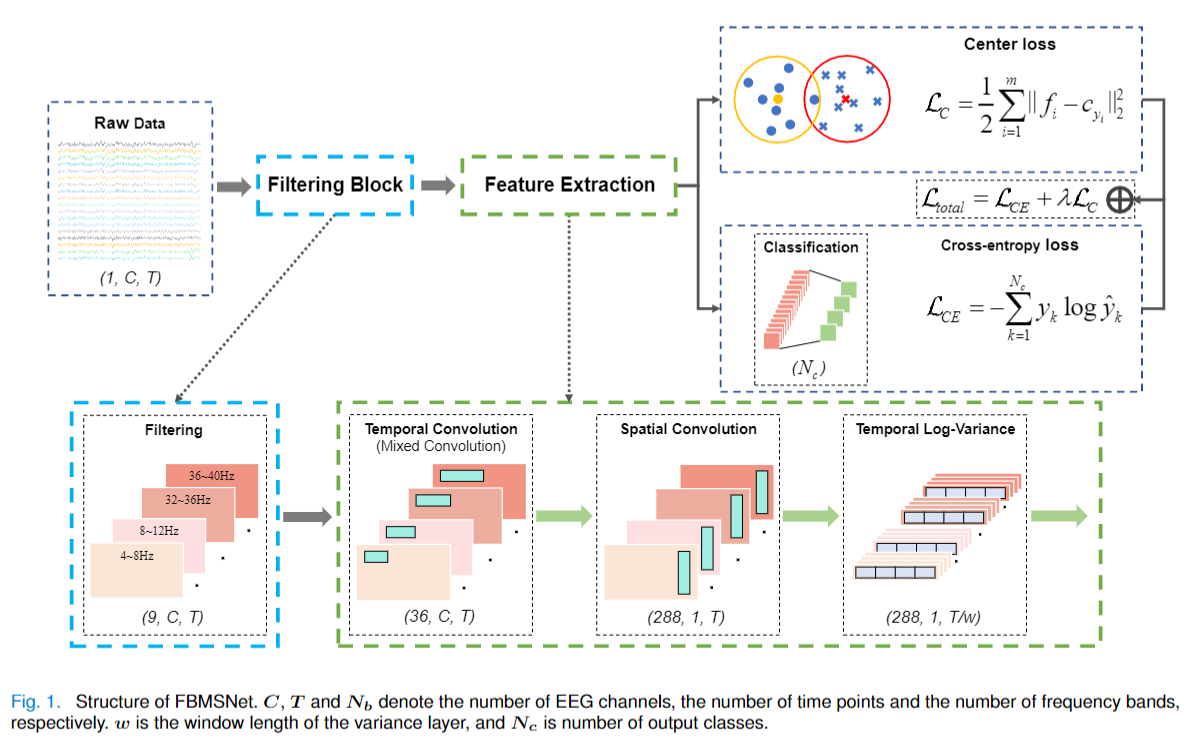

Bases:

EEGModuleMixin,ModuleFBMSNet from Liu et al (2022) [fbmsnet].

FilterBank Layer: Applying filterbank to transform the input.

Temporal Convolution Block: Utilizes mixed depthwise convolution (MixConv) to extract multiscale temporal features from multiview EEG representations. The input is split into groups corresponding to different views each convolved with kernels of varying sizes. Kernel sizes are set relative to the EEG sampling rate, with ratio coefficients [0.5, 0.25, 0.125, 0.0625], dividing the input into four groups.

Spatial Convolution Block: Applies depthwise convolution with a kernel size of (n_chans, 1) to span all EEG channels, effectively learning spatial filters. This is followed by batch normalization and the Swish activation function. A maximum norm constraint of 2 is imposed on the convolution weights to regularize the model.

Temporal Log-Variance Block: Computes the log-variance.

Classification Layer: A fully connected with weight constraint.

- Parameters:

n_chans (int) – Number of EEG channels.

n_outputs (int) – Number of outputs of the model. This is the number of classes in the case of classification.

chs_info (list of dict) – Information about each individual EEG channel. This should be filled with

info["chs"]. Refer tomne.Infofor more details.n_times (int) – Number of time samples of the input window.

input_window_seconds (float) – Length of the input window in seconds.

sfreq (float) – Sampling frequency of the EEG recordings.

n_bands (int, default=9) – Number of input channels (e.g., number of frequency bands).

n_filters_spat (int, default=36) – Number of output channels from the MixedConv2d layer.

temporal_layer (str, default='LogVarLayer') – Temporal aggregation layer to use.

n_dim – The description is missing.

stride_factor (int, default=4) – Stride factor for temporal segmentation.

dilatability (int, default=8) – Expansion factor for the spatial convolution block.

activation (nn.Module, default=nn.SiLU) – Activation function class to apply.

kernels_weights – The description is missing.

cnn_max_norm – The description is missing.

linear_max_norm – The description is missing.

verbose (bool, default False) – Verbose parameter to create the filter using mne.

filter_parameters – The description is missing.

- Raises:

ValueError – If some input signal-related parameters are not specified: and can not be inferred.

Notes

This implementation is not guaranteed to be correct and has not been checked by the original authors; it has only been reimplemented from the paper description and source code [fbmsnetcode]. There is an extra layer here to compute the filterbank during bash time and not on data time. This avoids data-leak, and allows the model to follow the braindecode convention.

References